Spectra Assure Free Trial

Get your 14-day free trial of Spectra Assure

Get Free TrialMore about Spectra Assure Free Trial

You probably recognize the following URLs as being “fishy”:

https://googIe[.]by

https://login.officé365[.]com

https://oauth-google[.]com

https://www.paypaI[.]services

And maybe these, too:

http://web.apple.com-secureloginx29376support.minualsdtuch[.]com/

http://drive.goggle.com-secure-attached-file-shared.g00gle.com.millionairecareeracademy[.]com/

http://americanexpress.com.cy.cgi-bin.webscr.cmd.login-submit.dispatch.areyasanat[.]ir/

If you read our previous blog, “Catching deceptive links before the click,” where we described the most common of these attacks, you probably recognized the URLs above as typical typosquatting, homoglyph, and domain-spoofing attacks. You don't have to be a security expert to notice that a URL is "a bit off." The domain may seem unusually long, or you may realize that few popular websites end with the top-level domain .services. But what if an average user saw one of these URLs next to a green lock and the word "Secure"? Would they stop and think twice?

As we will show later on, they very well should.

Among other things, the job of a threat analyst involves pivoting through large data sets in search of information that will, hopefully, give a more complete picture of an emerging threat. URLs are an important part of that data. The ReversingLabs Titanium Platform extracts URLs from files as part of the interesting strings metadata. To streamline the analysis of these large data sets, we developed a static URL analysis technology. It deconstructs each URL into its basic components, aggregates the suspicious indicators while accounting for how they reinforce one another, and assigns the URL a severity level from 1 to 5. URLs with the highest severity level are regarded as malicious, while URLs with lower scores are considered suspicious. Alongside the severity level, the analyzer also emits descriptive tags that explain the reasoning behind the classification.
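To make the three steps above concrete (deconstruct, collect indicators, aggregate into a 1 to 5 severity with tags), here is a toy Python sketch. It is not the ReversingLabs analyzer: the brand list, TLD list, lure words, lookalike table, length threshold, and the "synergy" rule are all hypothetical choices made for illustration, and the registered name is naively taken as the second-to-last label rather than derived from the Public Suffix List.

```python
from dataclasses import dataclass, field
from urllib.parse import urlsplit

# Hypothetical indicator data, chosen only to illustrate the idea.
BRANDS = {"google", "apple", "paypal", "americanexpress", "office365"}
UNCOMMON_TLDS = {"services", "ga", "ir", "by"}
LURE_WORDS = ("secure", "login", "support", "account", "verify")
# Fold common lookalike characters onto one "skeleton" form.
LOOKALIKES = str.maketrans({"1": "l", "i": "l", "0": "o"})


@dataclass
class Verdict:
    severity: int  # 1 (few or no indicators) to 5 (treated as malicious)
    tags: list[str] = field(default_factory=list)


def analyze(url: str) -> Verdict:
    # 1. Deconstruct the (possibly defanged) URL into its components.
    host = urlsplit(url.replace("[.]", ".")).hostname or ""
    labels = host.split(".")
    tld = labels[-1]
    # Naive: treat the second-to-last label as the registered name
    # (a real analyzer would consult the Public Suffix List).
    sld = labels[-2] if len(labels) > 1 else labels[0]
    subdomains = labels[:-2]

    # 2. Record each individual indicator as a descriptive tag.
    tags: list[str] = []
    if len(host) > 40:
        tags.append("long_hostname")
    if tld in UNCOMMON_TLDS:
        tags.append("uncommon_tld")
    if not host.isascii():
        tags.append("non_ascii_host")
    if any(brand in label for label in subdomains for brand in BRANDS):
        tags.append("brand_in_subdomain")
    if any(word in host for word in LURE_WORDS):
        tags.append("lure_keyword")
    skeleton = sld.translate(LOOKALIKES)
    if sld not in BRANDS and any(skeleton == b.translate(LOOKALIKES) for b in BRANDS):
        tags.append("brand_lookalike")

    # 3. Aggregate: more tags mean higher severity, and some combinations
    #    reinforce each other enough to reach the top level on their own.
    severity = min(1 + len(tags), 4)
    strong_pairs = ({"brand_in_subdomain", "lure_keyword"},
                    {"brand_lookalike", "uncommon_tld"})
    if any(pair <= set(tags) for pair in strong_pairs):
        severity = 5
    return Verdict(severity, tags)


for u in ("https://www.google.com/",
          "https://googIe[.]by",
          "http://web.apple.com-secureloginx29376support.minualsdtuch[.]com/"):
    print(u, analyze(u))
```

A production analyzer would use far richer indicator sets, but the shape is the same: each tag records one finding, and the severity comes from how the findings combine rather than from any single one.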

Unlike popular dynamic analysis services, where each link has to be opened in a sandbox (and must still be active at the time of analysis), or network reputation services, which classify a URL by aggregating historical WHOIS/DNS data or download history, static analysis gives us fast, in-depth insight into a URL at any time. Besides providing additional detail, this helps analysts prioritize large data sets and immediately draws attention to the URLs with the most malicious indicators.

To test the capabilities of our static analyzer beyond the files and metadata used to develop it, we analyzed two large and interesting sources of domain names (DNS zone files and the Certificate Transparency log network) and came to some eye-opening conclusions.

If you are not familiar with DNS zone files, they are text files that describe a DNS zone. Because the zone of a top-level domain (TLD) contains a delegation record for every domain registered under it, the zone file of a TLD effectively provides a list of the domains belonging to that TLD. The list of all generic and country-code TLDs, along with the authorities responsible for them, is available on the IANA website, while zone files for generic TLDs can be requested from the ICANN Centralized Zone Data Service (CZDS).
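As a rough illustration of how a domain list can be pulled out of a TLD zone file, the sketch below keeps the owner names of NS (delegation) records. It assumes each record sits on one line in the fully qualified form `owner TTL class type rdata`; the function name `domains_from_zone` and the sample records are made up, and zone files that use `$ORIGIN`, relative names, or multi-line records would need a proper parser.

```python
def domains_from_zone(lines, tld):
    """Return the unique domains delegated in a TLD zone file.

    Assumes one fully qualified record per line, 'owner TTL class type rdata',
    with no $ORIGIN-relative names or multi-line records.
    """
    apex = tld.lower().rstrip(".")
    domains = set()
    for line in lines:
        line = line.split(";", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line or line.startswith("$"):
            continue  # skip blank lines and directives such as $TTL
        fields = line.split()
        if len(fields) < 5 or fields[3].upper() != "NS":
            continue  # only NS (delegation) records mark registered domains
        owner = fields[0].lower().rstrip(".")
        if owner.endswith("." + apex):  # excludes the TLD apex itself
            domains.add(owner)
    return domains


# Made-up records in the assumed format (not real zone data).
sample = """\
org.             3600   in  soa  ns0.example.net. hostmaster.example.net. 1 7200 900 1209600 3600
org.             86400  in  ns   ns0.example.net.
craigs1ist.org.  86400  in  ns   ns1.example.net.
craigs1ist.org.  86400  in  ns   ns2.example.net.
ns1.example.org. 86400  in  a    192.0.2.1
""".splitlines()

print(sorted(domains_from_zone(sample, "org")))  # ['craigs1ist.org']
```

A domain usually has several NS records, so collecting owners into a set is what turns "records" into "unique domains".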

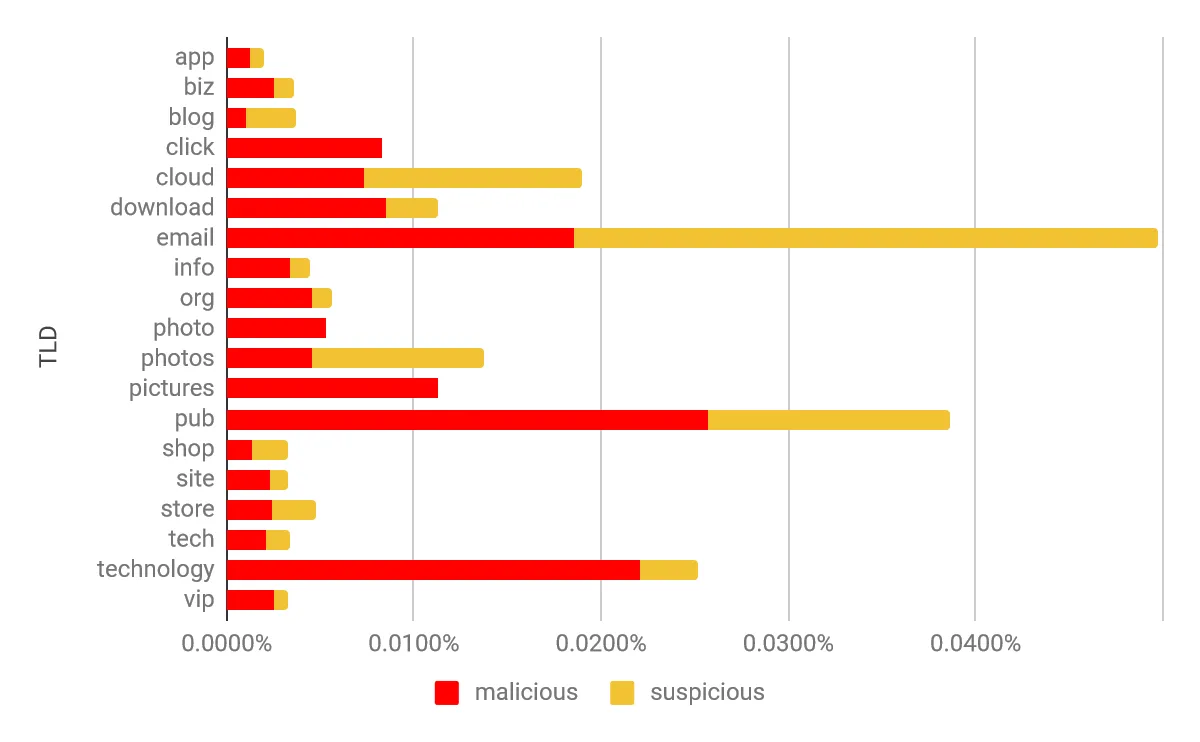

The zone files we tested covered several generic top-level domains (gTLDs) and contained more than 24 million unique records in total. Of these, 875 were classified with the highest severity level, that is, with the highest probability of being malicious. (Note: in this case, the classifier's input was only the bare domain name, without any other URL parts that could trigger additional indicators!)

Graph 1 - Percentage of domains classified as malicious/suspicious

As the graph above shows, the percentage of domains identified as malicious is roughly the same in every TLD, except for .email, .pub, and .technology, which have slightly more classified entries. The offending domains were predominantly homoglyph spoofs of popular domains (almost every TLD has at least one variant of Google and Apple spoofs). For example, a malicious actor who wanted to register a homoglyph variant of craigslist.org would have a hard time finding an unused one, because the most convincing variants are already taken:
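The crowding of the craigslist namespace is easy to reproduce. The sketch below enumerates every combination of a few lookalike substitutions (l to 1 or i, i to l). The substitution table is hypothetical and deliberately tiny, yet all six domains listed below fall out of it, including craigsilst, which arises from swapping l and i at two adjacent positions.

```python
from itertools import product

# Hypothetical lookalike table: each character maps to the glyphs it may be swapped for.
LOOKALIKES = {"l": ["l", "1", "i"], "i": ["i", "l"]}


def homoglyph_variants(name: str) -> set[str]:
    """Return every variant of name produced by any combination of lookalike swaps."""
    choices = [LOOKALIKES.get(ch, [ch]) for ch in name]
    return {"".join(combo) for combo in product(*choices)} - {name}


variants = homoglyph_variants("craigslist")
print(len(variants))  # 11
observed = ["craigs1ist", "craigsiist", "craigsilst", "craigsllst", "cralgslist", "cralgsllst"]
print(all(v in variants for v in observed))  # True
```

The number of candidates is the product of the per-character choices (here 2 × 3 × 2, minus the original), so a realistic lookalike table makes the space grow quickly, which is exactly why defenders benefit from checking names against such a list rather than hoping every variant is registered defensively.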

craigs1ist[.]org

craigsiist[.]org

craigsilst[.]org

craigsllst[.]org

cralgslist[.]org

cralgsllst[.]org

Some other notable finds are Punycode homoglyph attacks in all shapes and sizes:

gōogle[.]app

gôogle[.]app

göogle[.]info

goögle[.]info

góogle[.]org

googłe[.]org

In the examples above, it is interesting to note that even well-known TLDs such as .org, which are unlikely to arouse suspicion in an average user, are far from free of these malicious domains.
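Zone files store internationalized names in their ASCII-compatible Punycode form (labels starting with `xn--`), so a detector has to decode them before comparing them against a brand. The sketch below uses Python's built-in `idna` codec (which implements IDNA 2003; the third-party `idna` package implements IDNA 2008) and strips diacritics with NFKD decomposition. Some letters, such as `ł`, have no decomposition, so the small `EXTRA_FOLDS` table is a hypothetical, partial supplement rather than a complete confusables list like the one in Unicode TS #39.

```python
import unicodedata

# Hypothetical, partial folds for letters that NFKD does not decompose.
EXTRA_FOLDS = {"ł": "l", "ø": "o", "đ": "d"}


def skeleton(label: str) -> str:
    """Remove diacritics so lookalikes such as 'gōogle' compare equal to 'google'."""
    decomposed = unicodedata.normalize("NFKD", label.lower())
    stripped = "".join(c for c in decomposed if not unicodedata.combining(c))
    return "".join(EXTRA_FOLDS.get(c, c) for c in stripped)


def decode_and_fold(ace_name: str) -> tuple[str, str]:
    """Decode an ASCII 'xn--' domain name; return (unicode_name, folded_first_label)."""
    unicode_name = ace_name.encode("ascii").decode("idna")
    return unicode_name, skeleton(unicode_name.split(".")[0])


samples = ["gōogle.app", "gôogle.app", "göogle.info", "goögle.info", "góogle.org", "googłe.org"]
for name in samples:
    ace = name.encode("idna").decode("ascii")  # the form stored in the zone file
    print(ace, *decode_and_fold(ace))
```

Every sample folds back to `google`, which is the comparison a brand-spoofing check actually needs; the `xn--` form on its own looks nothing like the brand.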

The second data set we put to the test was the Certificate Transparency (CT) log network. CT is an internet security standard and open source framework for monitoring and auditing digital certificates. The standard creates a system of public logs that seeks to eventually record all certificates issued by publicly trusted certificate authorities, allowing maliciously issued certificates to be identified efficiently. Cali Dog Security, through its CertStream project, does a great job of watching, aggregating, and parsing the transparency logs, and presenting all of this through simple libraries.

We connected our analysis module to the CertStream endpoint and let it run for several days. The stream outputs more than 3 million entries per day. It often repeats the same domain name, but the number of unique entries is still of the same order of magnitude.
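Because the stream repeats domains, some deduplication is needed before analysis. The sketch below assumes the JSON message shape used in CertStream's published examples (a `message_type` of `"certificate_update"`, with domains under `data.leaf_cert.all_domains`); please check it against the current CertStream documentation. In a live setup, the `certstream` Python library's `listen_for_events(callback, url=...)` entry point would feed messages in; here a hypothetical in-memory list stands in so the sketch runs offline.

```python
from typing import Iterable, Iterator


def unique_domains(messages: Iterable[dict]) -> Iterator[str]:
    """Yield each domain name the first time it appears in a CertStream-style feed."""
    seen: set[str] = set()
    for msg in messages:
        if msg.get("message_type") != "certificate_update":
            continue  # heartbeats and other message types carry no certificate
        for domain in msg["data"]["leaf_cert"]["all_domains"]:
            domain = domain.lower()
            if domain.startswith("*."):
                domain = domain[2:]  # fold wildcard entries onto their base name
            if domain not in seen:
                seen.add(domain)
                yield domain


# Hypothetical messages standing in for the live WebSocket feed.
sample = [
    {"message_type": "heartbeat"},
    {"message_type": "certificate_update",
     "data": {"leaf_cert": {"all_domains": ["securelogin.example", "*.securelogin.example"]}}},
    {"message_type": "certificate_update",
     "data": {"leaf_cert": {"all_domains": ["securelogin.example", "other.example"]}}},
]
print(list(unique_domains(sample)))  # ['securelogin.example', 'other.example']
```

The `seen` set grows without bound; a service running for days would more likely use a bounded structure such as a time-expiring cache or a Bloom filter.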

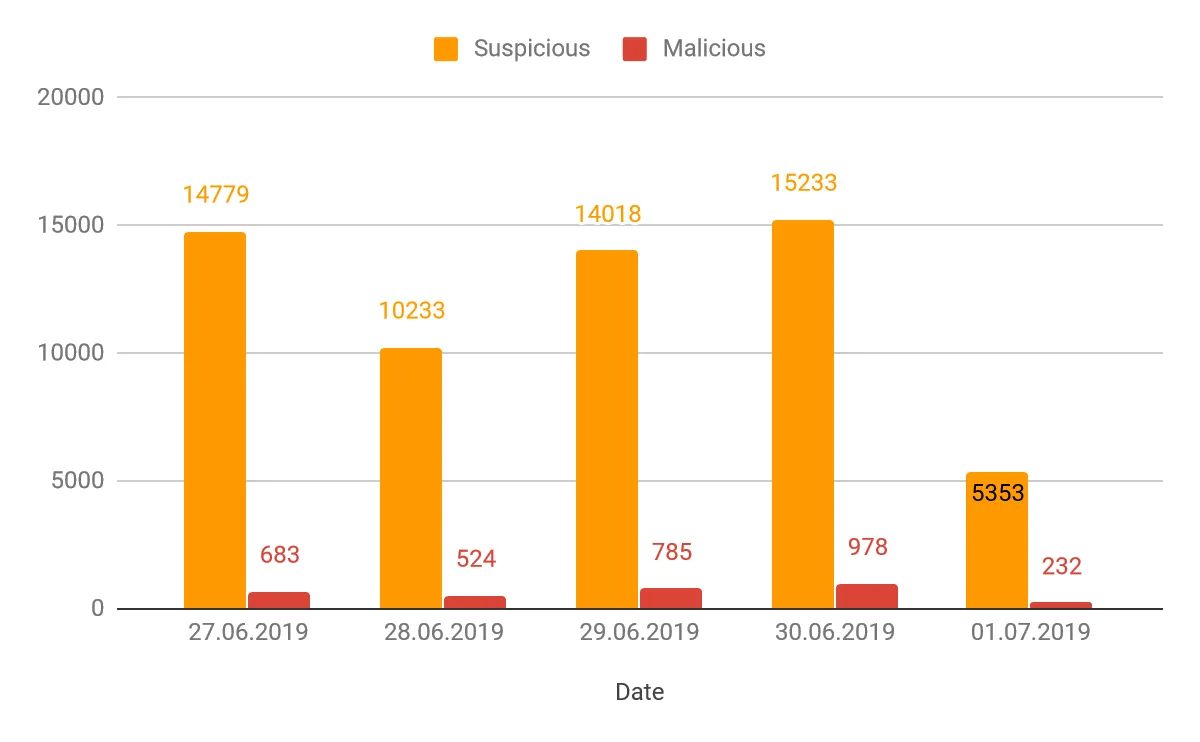

During the first 5 days of the module's run, it caught 62,818 domains that triggered at least one suspicious indicator, of which 3,203 were classified with the highest severity.

Graph 2 - Number of classified domains per day

Compared to the total number of entries, this may not seem like much, but knowing that almost a thousand new malicious-looking domains get a valid certificate every day should make anyone a bit worried. While you can be almost certain that m.facebook.com.securelogin[.]page is malicious, the real danger lies in all the generic-looking domains that use easily generated dynamic DNS records, such as manage-accountid.serveirc[.]com.
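Both patterns can be checked statically from the hostname alone. The sketch below flags a well-known brand domain embedded as a run of labels anywhere except at the end of the hostname (as in m.facebook.com.securelogin[.]page), and hostnames that sit under a dynamic DNS parent domain. `BRAND_DOMAINS` and `DYNAMIC_DNS_PARENTS` are hypothetical placeholders seeded only with this post's examples; a real system would maintain them from curated data.

```python
from typing import Optional

# Hypothetical placeholder lists seeded with this post's examples.
BRAND_DOMAINS = {"facebook.com", "paypal.com", "apple.com"}
DYNAMIC_DNS_PARENTS = {"serveirc.com"}


def embedded_brand(host: str) -> Optional[str]:
    """Return a brand domain found inside host anywhere except as its real suffix."""
    labels = host.lower().rstrip(".").split(".")
    for brand in sorted(BRAND_DOMAINS):
        parts = brand.split(".")
        # Try every starting position except the last one, where the brand
        # would legitimately own the host (e.g. m.facebook.com).
        for i in range(len(labels) - len(parts)):
            if labels[i:i + len(parts)] == parts:
                return brand
    return None


def on_dynamic_dns(host: str) -> bool:
    """True if host is a subdomain of a known dynamic DNS parent domain."""
    host = host.lower().rstrip(".")
    return any(host.endswith("." + parent) for parent in DYNAMIC_DNS_PARENTS)


for h in ("m.facebook.com.securelogin.page", "m.facebook.com", "manage-accountid.serveirc.com"):
    print(h, embedded_brand(h), on_dynamic_dns(h))
```

Note that the dynamic DNS check only says *where* a name lives, not that it is malicious; in practice it would be one more indicator feeding the overall score.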

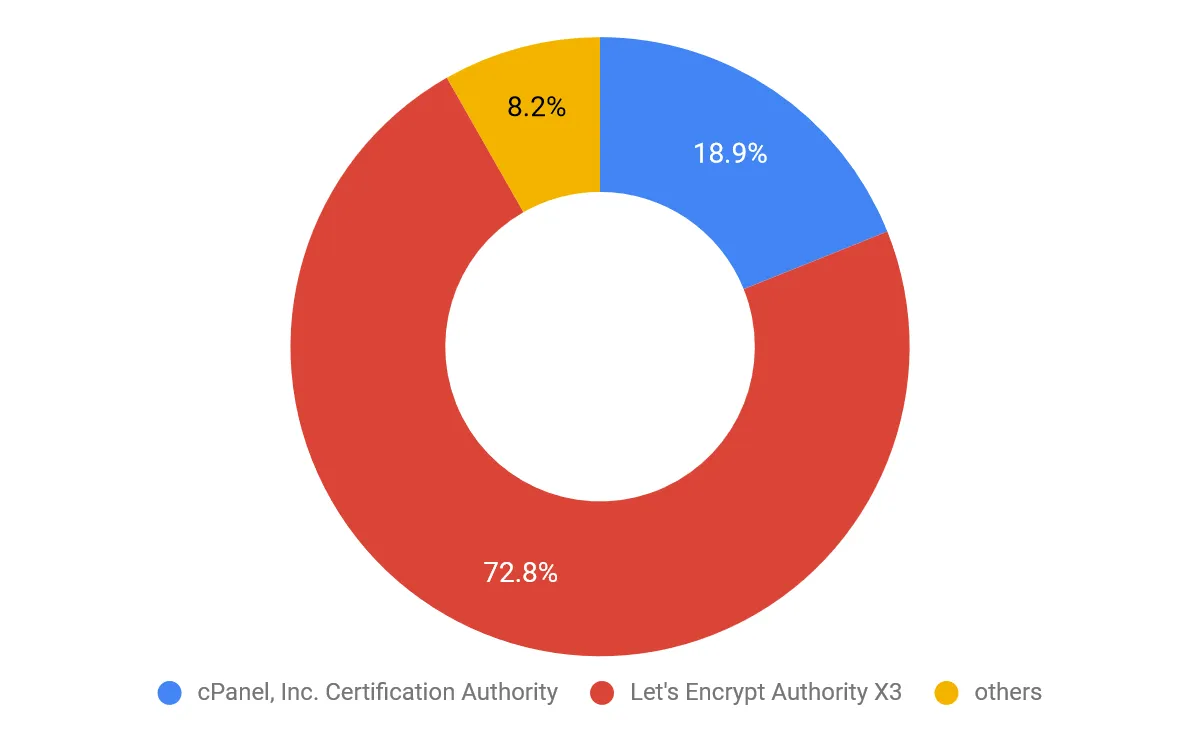

Another thing immediately apparent from the results is the distribution of classified domains across certificate authorities (CAs). In total, we observed 72 CAs that issued certificates for domains with at least one suspicious indicator. Of these, 14 issued a certificate for at least one domain classified with the highest severity (i.e., a malicious classification). The distribution of malicious domains is illustrated in the following chart.
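A per-CA breakdown like this one amounts to grouping classified domains by the certificate's issuer. The sketch below assumes CertStream-style messages in which the issuer's common name is available as `data.leaf_cert.issuer.CN` (verify against the CertStream documentation), and it takes the classifier as a parameter; `looks_malicious` is a trivial hypothetical stand-in, not the static analyzer described in this post. Counting distinct (issuer, domain) pairs keeps duplicate stream entries from inflating the totals.

```python
from collections import Counter
from typing import Callable, Iterable


def malicious_by_issuer(messages: Iterable[dict],
                        is_malicious: Callable[[str], bool]) -> Counter:
    """Count distinct malicious-looking domains per issuing CA (by issuer CN)."""
    pairs: set[tuple[str, str]] = set()
    for msg in messages:
        if msg.get("message_type") != "certificate_update":
            continue
        leaf = msg["data"]["leaf_cert"]
        issuer = leaf.get("issuer", {}).get("CN") or "unknown"
        for domain in leaf["all_domains"]:
            if is_malicious(domain):
                pairs.add((issuer, domain.lower()))
    return Counter(issuer for issuer, _ in pairs)


def looks_malicious(domain: str) -> bool:
    """Hypothetical stand-in for a real classifier."""
    return domain.startswith("secure") and "-" in domain


def update(issuer: str, *domains: str) -> dict:
    """Build a hypothetical CertStream-style certificate_update message."""
    return {"message_type": "certificate_update",
            "data": {"leaf_cert": {"issuer": {"CN": issuer}, "all_domains": list(domains)}}}


stream = [
    update("Let's Encrypt Authority X3", "secure5-chase.com", "www.secure5-chase.com"),
    update("Let's Encrypt Authority X3", "secure3-paypal.com"),
    update("Let's Encrypt Authority X3", "secure3-paypal.com"),  # duplicate entry
    update("Example CA", "secure-bank-login.example"),
    update("Example CA", "blog.example"),
]
print(malicious_by_issuer(stream, looks_malicious).most_common())
```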

Graph 3 - Distribution of malicious domains across CAs

As seen above, “Let’s Encrypt Authority X3” has issued more certificates to suspected malicious domains than all other CAs combined. This is not a surprise, as Let’s Encrypt is known for its free and automated certificate issuing process, but it is still striking that obviously malicious domain names such as:

secure5-chase[.]com

secure3-paypal[.]com

01-sberbank[.]ru

do not raise any red flags during the certificate issuing process.
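Names like these follow a simple pattern, sometimes called combosquatting: a brand name joined by hyphens to extra tokens such as "secure" or a few digits. The sketch below, with a hypothetical `BRANDS` list, splits the registered label on hyphens, strips digits from each token, and reports a brand token that is not the whole label. It only illustrates how cheaply such a check can run; it makes no claim about what checks any CA actually performs.

```python
import re
from typing import Optional

# Hypothetical brand list for illustration only.
BRANDS = {"chase", "paypal", "sberbank"}


def combosquat_brand(domain: str) -> Optional[str]:
    """Return the brand if the first label is a brand combined with other tokens."""
    label = domain.lower().split(".")[0]  # assumes a bare 'label.tld' input
    for token in label.split("-"):
        token = re.sub(r"\d+", "", token)  # 'secure5' -> 'secure', '01' -> ''
        if token in BRANDS and token != label:
            return token
    return None


for d in ("secure5-chase.com", "secure3-paypal.com", "01-sberbank.ru",
          "chase.com", "purchase-help.com"):
    print(d, combosquat_brand(d))
```

Matching whole tokens rather than substrings is what keeps a name like purchase-help.com from being flagged as a "chase" spoof.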

With the latest open source phishing frameworks, the whole social engineering process is incredibly streamlined. As hinted at by one of the domains caught (abs.twitter.com.evilginx2.iamxgigo[.]ga), one of these tools is evilginx2, a man-in-the-middle framework that captures login credentials together with session cookies and can therefore bypass two-factor authentication. Getting it up and running with a valid SSL certificate takes an average user only a few minutes. Because the process is so streamlined, a malicious actor can easily change the parameters of an attack while it is under way, making it difficult to respond efficiently. Even if the URL is analyzed and blacklisted only a few minutes after it first appears, that still leaves enough time for a targeted spear-phishing attack to succeed.

Having immediate insight into all URLs that enter the environment through static URL analysis provides a significant advantage over simply relying on domain blacklisting. Combined with the rest of the Titanium Platform, it helps you efficiently detect and gain insight into emerging threats.

Read the previous blog in this series: Catching deceptive links before the click

Explore RL's Spectra suite: Spectra Assure for software supply chain security, Spectra Detect for scalable file analysis, Spectra Analyze for malware analysis and threat hunting, and Spectra Intelligence for reputation data and intelligence.