Spectra Assure Free Trial

Get your 14-day free trial of Spectra Assure

Get Free TrialMore about Spectra Assure Free Trial

As application security professionals and developers seek ways to both prevent new flaws and manage existing vulnerabilities in software, the problems of scale and limited time inevitably rear their heads. Whether it is rooting out vulnerabilities before shipping code, or remediating flaws already in production, there's rarely enough time to get to everything and meet top-line business objectives.

This is why vulnerability prioritization is such a huge factor in securing software. Most security practitioners, developers, and DevOps teams understand this concept in principle. But when it comes to executing on it with effective prioritization, they consistently struggle.

Even though the vulnerability management discipline has had decades to evolve, most organizations still struggle to effectively prioritize when and which flaws to fix or mitigate first in their software, their supply chain dependencies, and their application programming interfaces (APIs).

There are a lot of factors that go into that struggle, but security experts increasingly believe that many of them come down to an unfortunate addiction to the holy trinity of vulnerability management. Vulnerability management programs and their tooling depend far too heavily on vulnerability information from the Common Vulnerabilities and Exposures (CVE) system, the National Vulnerability Database (NVD), which is fed by CVE data, and the simplistic severity ratings of the Common Vulnerability Scoring System (CVSS). This over-reliance on CVEs, the NVD, and CVSS leaves many software security programs running just to stand still.

They struggle to appropriately gauge risk of any given vulnerability to their actually deployed software and their environments. They have a difficult time detecting errors in CVE information. And they can't effectively use the NVD to manage software supply chain risks. Consequently, they're working harder than ever but can't keep up with the scope of vulnerabilities, which keep ballooning in the CVE/NVD.

Here's what you need to know about app sec's addiction to vulnerability reporting — and why application security needs to evolve to take on supply chain security.

See Special Reports: The Evolution of Application Security | NVD Analysis: A Call to Action on Software Supply Chain Security

First instituted in 1999, the CVE system initially cut its grand-opening ribbon with a scant 321 published vulnerabilities through the work of MITRE. By 2004, about a year before the NVD was created through funding by the Department of Homeland Security, the database's predecessor, the Internet Categorization of Attacks Toolkit (ICAT), had about 10,000 published CVEs.

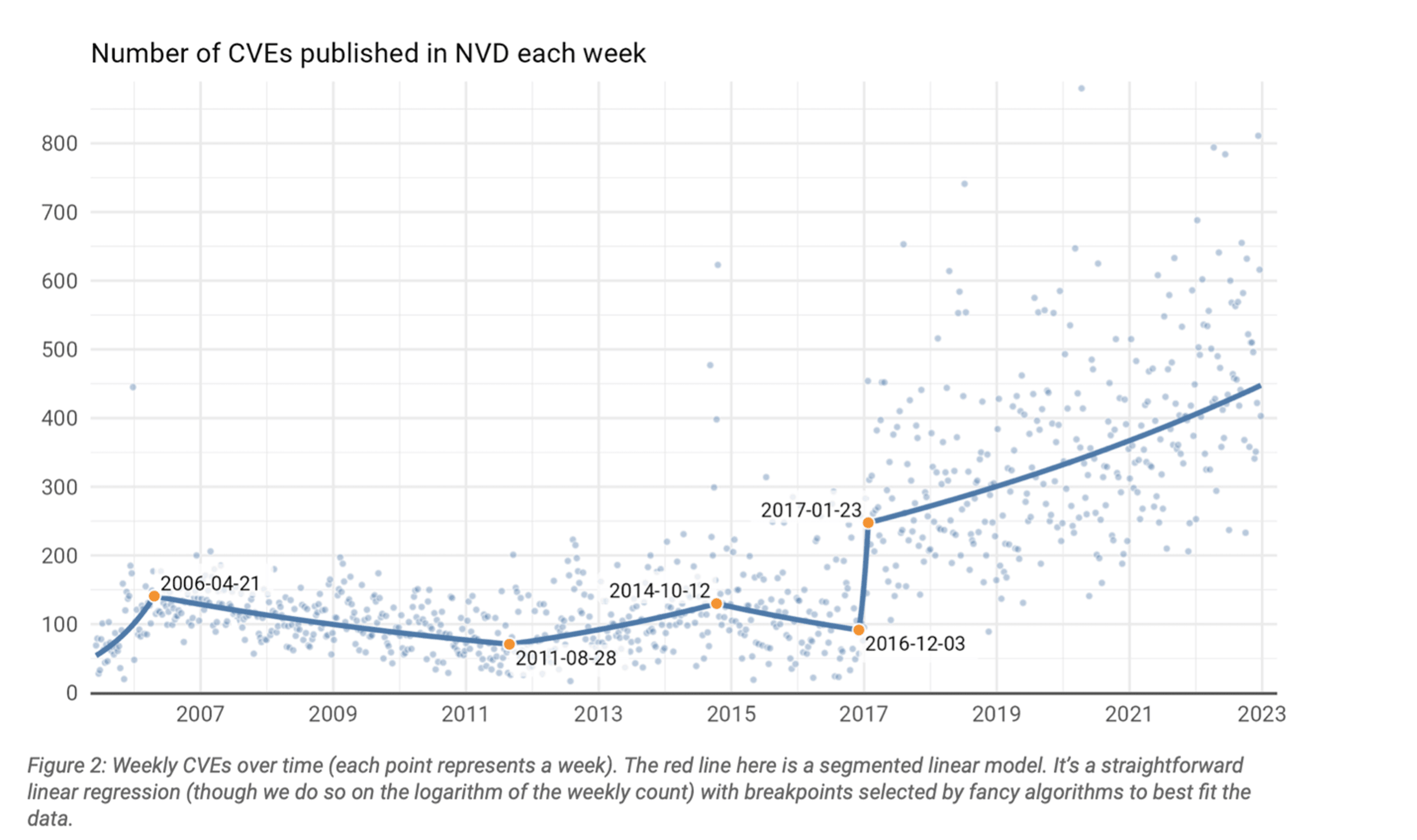

In 2022 alone, more than 26,000 CVEs were published, and by the end of that year the total number of published CVEs had snowballed to 190,971. A recent Cyentia Institute report on the CVE landscape projects that by 2025 the median weekly rate of CVE publication will hover around 800 and could peak at around 1,200.

Source: Cyentia Institute/F5

Cyentia Institute CVE landscape report: "Since each new vulnerability represents a theoretically new component in an attack vector, and since old vulnerabilities never really go away, defenders will face an escalating number of distinct attack vectors. The most urgent question facing CISOs today is not 'will I be attacked?' (yes) or 'when will I be attacked?' (today), but 'how will I be attacked?' This means that the task of triaging new CVEs and prioritizing patches will become an increasingly significant part of security operations."

The goal of CVE was a worthy one at the time and remains so today: standardizing the way developers and security pros identify and track flaws across different products and vendors, says Percy Grunwald, site reliability engineer (SRE) for Cisco Meraki and a full stack development veteran.

Percy Grunwald: "This standardization is important because it enables organizations to efficiently manage their vulnerability management programs. Rather than having to manually track vulnerabilities for each product or vendor, organizations can rely on CVEs to keep them informed about newly discovered vulnerabilities and their associated patches."

At the same time, the CVE system has a number of limitations, he explains. Despite the rapidly accumulating CVEs published in the NVD, the system's coverage is incomplete, which leads to "blind spots."

Many of the most regrettable holes in the database are not just yet-unknown zero-day vulnerabilities, but also flaws that are known to vendors yet hidden behind the 'RESERVED' marker in CVE/NVD, which can obscure details about a flaw for extended periods of time, wrote longtime app sec pundit Mark Curphey in a recent two-part essay (See Part 1 and Part 2) on the woes of CVE/NVD.

Mark Curphey: "The usual reason given is that they have long release cycles and need to coordinate disclosure to their customers. While true for some, it's just bullshit for most. Of course when a vague reserved CVE is published, hackers do what we did at SourceClear. Unleash the bloodhounds, pore through the commits, find the vulnerability and build the exploit."

Additionally, CVEs lack valuable contextual information about the impact of a flaw on a specific environment, says Grunwald. "This means that organizations may not have a clear understanding of the risk that a vulnerability poses to their specific business operations," he says. "For example, a vulnerability may be rated as high severity, but if it does not affect a critical asset or business operation, it may not pose a significant risk to the organization."

Now, because of the constant expansion of the scope of flaws within the NVD, most organizations are always on the hunt for tooling that "automagically" detects and prioritizes vulnerabilities for them, says Walter Haydock, founder and CEO of StackAware.

Unfortunately, much of the automated tooling that organizations lean on today still relies solely on the scantily contextualized CVE information from the NVD, paired only with CVSS severity ratings (the limitations of which we'll tackle in a second). Under the hood the outputs of many vulnerability management scanners and platforms are still mostly just a function of CVE and CVSS information.

Overweighting is the issue facing the CVE system in a nutshell, explains Gary McGraw, the founder of Cigital and one of the grandfathers of modern app sec.

Gary McGraw: "The idea of having a common way of describing these things, it's a great idea. Having that drive everybody's product is silliness."

But that's what happens so often in the average vulnerability management program. They'll point their scanners at their software portfolio to hunt out critical and high severity vulnerabilities and end up coming up with a list of flaws flagged for fixes that's not at all tuned to the real state of risk within their software, says Haydock.

"I've seen vulnerabilities that are CVSS 9.8, so critical we think they'll be the highest priority, but when you look at the explanation of the vulnerability, it says, 'Oh, actually this is only exploitable when the software is deployed in a non-default configuration,'" Haydock says. "At that point, it's not even really a security vulnerability. It's kind of just being silly."

In addition to contextual issues, there's also the problem of just plain wrong CVEs. Curphey says so much of the pain caused by CVEs can be traced back to the old adage of garbage in, garbage out. As he and Haydock note, the NVD doesn't really validate CVEs. Once a vendor, researcher, or other software stakeholder is vetted as a CVE Numbering Authority (CNA), they can create CVEs that contain errors, inaccuracies, or other wrong information, and the tools and programs that depend on that information are none the wiser, says Haydock.

Walter Haydock: "I think 99.9% of the people who are submitting these things are doing it in good faith, but they might be wrong. They might be incorrect about something."

One noteworthy statistic that highlights the error issue in high contrast comes by way of the Dragos 2022 Year in Review report. A critical infrastructure security vendor, Dragos is a leading provider of threat intelligence in the operational technology (OT) world. As a part of that work, it analyzes OT-related CVEs. In its report, it found that a little over a third of OT-related CVEs had errors in them. These are not vulnerabilities in insignificant or small systems. They are flaws impacting some of the most sensitive systems in the world that run power plants, water treatment facilities, and major manufacturing facilities.

In addition to errors, there's the problem of CVE noise. This is caused by a high volume of flaws published by an army of security researchers bolstering their resumes and building up street cred by filing CVEs for esoteric edge-case vulnerabilities that would be extremely hard to replicate in real-world deployments, Haydock says.

Walter Haydock: "A lot of these things in the NVD are what I refer to as 'science projects'. For example, there are the type of vulnerabilities in there that describe scenarios like the one that shows you can conduct a denial of service attack against a certain piece of software by blasting a radio loud enough next to it. That may certainly be true, but what does that tell you from a security perspective? Not too much."

Both CVEs and CVSS have systematic weaknesses that prevent organizations from using them to prioritize effectively, Haydock says. On the NVD and CVE side, he still believes there is a wealth of information, much of it useful, and he thinks the gaps and problems in the way things are recorded are salvageable. On CVSS, his outlook is a whole lot darker.

Walter Haydock: "The numerical way that CVSS is put together ... I don't think that's salvageable. While well-intentioned and possessing some useful components, CVSS is a fundamentally flawed method for prioritizing the remediation of software vulnerabilities."

One of the fundamental issues is another contextual problem. CVSS severity ratings float out in the ether without enough information on exploitability or impact of a flaw, and are disconnected from the business importance of the affected asset in any given deployment situation, Grunwald says.

Percy Grunwald: "For example, a vulnerability may be rated as high severity, but if it does not affect a critical asset or business operation, it may not pose a significant risk to the organization."

Another huge issue is that the 1.0 through 10.0 scale implies the worst vulnerability is only about ten times worse than the least severe one, says Haydock, who says all evidence shows this not to be true. "Vulnerabilities follow a power-law distribution whereby a very small number are incredibly consequential while most pose little risk," he says. "Even when incorporating its environmental and temporal metrics, the CVSS does not reflect this reality."

The costs of real-world attacks on certain vulnerabilities illustrate how distorted the rating scale can be compared to real-world risk. Something like the Log4Shell vulnerability could cause billions of dollars in damages across the software supply chain, while many known vulnerabilities don't result in any monetary losses.

Walter Haydock: "Even presuming CVSS scores correlate with a vulnerability's exploitability or impact, there is no way to compare groups of vulnerabilities with each other or the cost of mitigating them using the CVSS' qualitative system. It is impossible to say whether 10 'critical' vulnerabilities are less or more concerning than 100 'high' ones."
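The group comparison Haydock describes becomes tractable once each finding carries a quantitative estimate rather than a severity label. The following is a minimal sketch, not a method taken from any of the experts quoted here: it assumes each vulnerability has an estimated probability of exploitation and an estimated dollar impact, and every number in it is invented for illustration.

```python
def group_expected_loss(vulns):
    """Sum probability * impact over (probability, impact_usd) pairs."""
    return sum(prob * impact_usd for prob, impact_usd in vulns)

# Hypothetical estimates, made up for this example only.
# Ten 'critical' findings on isolated, low-exposure systems.
critical_group = [(0.01, 200_000)] * 10
# One hundred 'high' findings on internet-facing systems.
high_group = [(0.05, 100_000)] * 100

critical_loss = group_expected_loss(critical_group)
high_loss = group_expected_loss(high_group)

print(f"10 critical findings: ${critical_loss:,.0f} expected loss")
print(f"100 high findings:    ${high_loss:,.0f} expected loss")
```

Because both groups are expressed in the same unit, they can be compared with each other and with the cost of fixing them, which a severity label alone does not allow. The quality of the answer depends entirely on the quality of the probability and impact estimates.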

And then there is the whole issue of the CVE/NVD's relationship with software supply chain security, Curphey says.

Mark Curphey: "CVE/NVD doesn't have the right data to reproduce an open source vulnerability, let alone automate detection."

ReversingLabs' recent NVD Analysis report pointed out how the NVD is still dominated by legacy platforms from the likes of Microsoft, Red Hat, Google, Apple and Oracle:

This rise in software supply chain attacks is a call to action for NIST. NVD is a critical resource for both software development and security organizations. To remain relevant, however, the scope of NVD needs to expand to capture the full breadth of vulnerable platforms and applications, as well as the diversity of security exposures (the “E” in CVE) — including malware injections, software tampering and secrets exposure, which threaten supply chain integrity.

Curphey writes that the only way to solve this is through specialized vulnerability databases, particularly a specialized database for open source libraries, as well as a standards-based protocol to connect and maintain them.

Mark Curphey: "It is not a matter of summing up library use alone, but a complex model of weighted scores based on how and where they are used. It's also worth pointing out that what is critical to one industry may not be critical to another. No one size fits all."

Haydock says that he can't see why the NVD couldn't handle some of that work. He agrees that identifying specific packages in the NVD and disambiguating them is a problem, but he sees progress being made on this front. "I do see some initiatives underway using package URLs to clarify exactly which piece of software you're talking about," he says.
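To make the package URL idea concrete: a package URL (purl) packs the ecosystem, namespace, name, and version of a component into one string of the form `pkg:type/namespace/name@version`. The sketch below is a deliberately simplified parser written for illustration. The `PackageRef` type and `parse_purl` function are hypothetical names, the parser ignores qualifiers, subpaths, and the type-specific normalization rules in the purl specification, and a real tool would more likely use a maintained purl library.

```python
from typing import NamedTuple, Optional
from urllib.parse import unquote

class PackageRef(NamedTuple):
    type: str                 # ecosystem, e.g. "maven" or "pypi"
    namespace: Optional[str]  # e.g. a Maven group ID; None if absent
    name: str
    version: Optional[str]

def parse_purl(purl: str) -> PackageRef:
    """Parse the core fields of a package URL (simplified sketch)."""
    scheme, sep, rest = purl.partition(":")
    if scheme != "pkg" or not sep:
        raise ValueError(f"not a package URL: {purl!r}")
    # Drop the optional '#subpath' and '?qualifiers' parts.
    rest = rest.split("#", 1)[0].split("?", 1)[0]
    path, _, version = rest.partition("@")
    parts = [p for p in path.strip("/").split("/") if p]
    if len(parts) < 2:
        raise ValueError(f"package URL needs a type and a name: {purl!r}")
    namespace = "/".join(unquote(p) for p in parts[1:-1]) or None
    return PackageRef(
        type=parts[0].lower(),
        namespace=namespace,
        name=unquote(parts[-1]),
        version=unquote(version) or None,
    )

ref = parse_purl("pkg:maven/org.apache.logging.log4j/log4j-core@2.14.1")
print(ref)
```

With the component identified this precisely, a vulnerability record can be matched against the exact library and version in a dependency tree instead of against a loosely named product.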

He adds that private vulnerability databases could be a problem if they're just a slightly modified copy of how the NVD does it.

Ultimately, most software security and vulnerability experts don't see CVEs as an inherently flawed system. Instead, the problem is how organizations and tooling use them, McGraw says.

Gary McGraw: "If [CVEs and the NVD] can help you do better security engineering and prioritize your limited resources, that's great. Yay. But if it is misleading you into prioritizing your limited resources incorrectly, stop."

The problem, of course, is how a security leader will know they're being misled. This is going to come down to sound risk management practices. Haydock says organizations need to stop "robotically" accepting the outputs from tools and databases at face value. They need to weigh the likelihood that a flaw will be exploited and the predicted impact when making prioritization choices, he says. That will take the work of sitting down at the table with senior leadership to talk about the business importance of certain assets, using additional metrics like the Exploit Prediction Scoring System (EPSS), and coming up with more complex methods for weighting and scoring risks.
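As a rough illustration of likelihood-and-impact prioritization, the sketch below ranks findings by estimated exploitation probability multiplied by estimated business impact instead of by CVSS score. It is an assumption-laden example, not Haydock's actual model: the `Finding` class, the placeholder identifiers, and all figures are hypothetical, and `exploit_prob` stands in for a likelihood estimate such as an EPSS score.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    cve_id: str          # placeholder identifier, not a real CVE
    cvss: float          # CVSS base score, kept only for comparison
    exploit_prob: float  # estimated likelihood of exploitation, 0.0 to 1.0
    impact_usd: float    # estimated loss if exploited on this asset

def expected_loss(finding: Finding) -> float:
    """Likelihood of occurrence times predicted impact."""
    return finding.exploit_prob * finding.impact_usd

def prioritize(findings):
    """Order findings from highest to lowest expected loss."""
    return sorted(findings, key=expected_loss, reverse=True)

# Invented values: impact figures would come from talking with the business.
findings = [
    Finding("CVE-A", cvss=9.8, exploit_prob=0.002, impact_usd=50_000),
    Finding("CVE-B", cvss=7.5, exploit_prob=0.40, impact_usd=2_000_000),
    Finding("CVE-C", cvss=5.3, exploit_prob=0.05, impact_usd=100_000),
]

for f in prioritize(findings):
    print(f"{f.cve_id}: CVSS {f.cvss}, expected loss ${expected_loss(f):,.0f}")
```

In this made-up data set the CVSS 9.8 finding lands last, because it is unlikely to be exploited and sits on a low-value asset, while the 7.5 finding on a critical system rises to the top. A simple product is only a starting point; the weighting an organization settles on may be considerably more involved.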

Grunwald concurs, stating that CVEs should never be seen as a silver bullet. To effectively manage their security posture, organizations need to adopt a more dynamic and risk-based approach that incorporates real-time threat intelligence and contextual information, he says.

Percy Grunwald: "This involves prioritizing vulnerabilities based on their potential impact on critical assets and business operations, rather than solely relying on CVE severity ratings. By taking into account the context of their specific environment, organizations can more accurately assess the risk that a vulnerability poses and prioritize their remediation efforts accordingly."

Explore RL's Spectra suite: Spectra Assure for software supply chain security, Spectra Detect for scalable file analysis, Spectra Analyze for malware analysis and threat hunting, and Spectra Intelligence for reputation data and intelligence.
