Rhode Island disclosed in December that a ransomware attack had resulted in a data breach of its RIBridges social services database, exposing personal data of about 650,000 residents that included Social Security numbers, dates of birth, and individual bank account numbers. The impact was enormous — more than half of the state’s population was affected.

It was just the latest in a series of malware-related disasters in 2024, and more seem likely to come. While companies continue to invest more in their security efforts, dangerous and costly malware is still slipping through their defenses. Whether it’s someone clicking on a malicious email attachment, file sharing and collaboration tools spreading malware-infected documents, or an employee downloading and running a legitimate application that nonetheless contains destructive files and objects, malware has multiple routes into an organization.

The traditional approach to malware detection is to send everything to a sandbox — a dynamic analysis engine that tests files by executing them in a controlled, isolated environment to examine the behavior and identify any malicious activity. Sandboxes have long been seen as a silver bullet for protecting against malicious files. Unfortunately, that’s no longer the case.

Today’s enterprise organizations have tens of thousands of files, if not more, entering their networks each day, and all of them need to be analyzed. This is where the shortcomings of traditional sandboxes and dynamic analysis start to surface in the form of long processing times, security workflow bottlenecks, and, worse, potentially malicious files going undetected. And while additional compute resources can be added to a sandbox to try to handle the increased load, this can become cost-prohibitive very quickly and still not solve the problem.

Here's what you need to know about moving beyond the sandbox to secure your organization against malicious files.

Where sandboxes fall short

It’s not just the sheer volume of files that poses a challenge for sandboxes; it’s also the increased size and complexity of files, with large, multilayered files becoming the new normal. The reality is that sandbox technology employing dynamic analysis has inherent limitations in the types and sizes of files that can be processed, with size usually restricted to no more than 500MB. This leaves a lot of files that can’t be analyzed by the sandbox. That’s a big problem for security operations centers (SOCs), which are increasingly faced with large, complex file structures, including a range of multimedia file types, multi-gigabyte virtual machine disk files, and large language model (LLM) file formats.

In many cases, when a sandbox is unable to analyze a file because of those limitations, it issues an ambiguous “malware not found” result, sometimes called a “fail open,” and the file is allowed to pass through to the network. The file is assumed to be good because it was passed through, but the fact is that malware was not detected because the system didn’t analyze the file.
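To make the fail-open problem concrete, here is a minimal sketch of the alternative: keeping "not analyzed" as its own verdict so that it can never be mistaken for "clean." The names `Verdict`, `sandbox_verdict`, and `allow_into_network` are hypothetical and do not come from any particular sandbox product; the 500MB ceiling is the typical limit mentioned above.

```python
from enum import Enum


class Verdict(Enum):
    MALICIOUS = "malicious"
    CLEAN = "clean"
    NOT_ANALYZED = "not_analyzed"  # the sandbox never ran the file


# Assumed ceiling, based on the ~500MB limit cited in the article.
MAX_SANDBOX_BYTES = 500 * 1024 * 1024


def sandbox_verdict(size_bytes: int, detonation_found_malware: bool) -> Verdict:
    """Hypothetical wrapper around a sandbox result."""
    if size_bytes > MAX_SANDBOX_BYTES:
        # Too large to detonate: report that explicitly instead of
        # returning an ambiguous "malware not found".
        return Verdict.NOT_ANALYZED
    return Verdict.MALICIOUS if detonation_found_malware else Verdict.CLEAN


def allow_into_network(verdict: Verdict) -> bool:
    """Fail closed: only files that were analyzed and found clean pass."""
    return verdict is Verdict.CLEAN
```

With this shape, an oversized file is held for another analysis method rather than waved through.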

Making matters worse, malware developers continue to hone their craft, developing more sophisticated tactics to evade sandbox detection. One common evasion tactic is to delay malicious code execution by building in a time delay before the malware unpacks. Another is to employ user-activity checks, such as analyzing patterns of mouse clicks, that let the malware determine whether it is running in a sandbox environment so it can delay executing its payload.

Code obfuscation is yet another evasive technique used by threat actors. Obfuscation occurs when the contents of a file are altered, through tactics such as encryption, packing, compression, or encoding, in order to make the malicious code undetectable.
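One widely used static heuristic for spotting packed or encrypted content is byte entropy: encrypted and compressed data looks close to random, while plain code and text do not. The article does not describe this heuristic, and the sketch below is not presented as how any specific product works; `shannon_entropy` and `looks_packed` are illustrative names, and the 7.2 threshold is an assumption chosen for the example.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Return the Shannon entropy of data in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)  # how often each byte value occurs
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def looks_packed(data: bytes, threshold: float = 7.2) -> bool:
    """Flag content whose entropy is near the 8-bit maximum.

    The threshold is an illustrative assumption, not an industry constant.
    """
    return shannon_entropy(data) >= threshold
```

High entropy alone is not proof of malice, since legitimate archives and media files are also compressed; it is a signal that a file needs unpacking before its contents can be judged.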

Sandboxes clearly have inherent limitations and drawbacks, but they’re still a useful tool. So what’s the right path forward when it comes to effective malware detection? The first step is to recognize that not every file needs dynamic analysis.

How RL's binary analysis can help

The ReversingLabs Advanced Malware Analysis Suite was developed to address this issue by employing an AI-driven, high-speed static binary analysis engine. RL's proprietary analysis engine performs fully recursive file deconstruction, deobfuscating and unpacking the underlying object structure to its base elements, extracting all internal indicators and metadata to determine the file intent and capabilities.
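The idea of recursive deconstruction can be illustrated with a toy example. This is only a sketch of the general technique using two container formats from the Python standard library; it is not RL's engine, and the function name `deconstruct`, the `!` path separator, and the depth limit are all assumptions made for illustration.

```python
import gzip
import hashlib
import io
import zipfile
import zlib

MAX_DEPTH = 8  # assumed guard against endlessly nested archives


def deconstruct(data: bytes, name: str = "root", depth: int = 0):
    """Yield (path, sha256, size) for an object and every object nested in it."""
    yield name, hashlib.sha256(data).hexdigest(), len(data)
    if depth >= MAX_DEPTH:
        return
    if data[:2] == b"\x1f\x8b":  # gzip magic bytes
        try:
            inner = gzip.decompress(data)
        except (OSError, EOFError, zlib.error):
            return  # damaged stream: report the outer object only
        yield from deconstruct(inner, name + "!gunzip", depth + 1)
    elif zipfile.is_zipfile(io.BytesIO(data)):
        try:
            with zipfile.ZipFile(io.BytesIO(data)) as archive:
                for info in archive.infolist():
                    if info.is_dir():
                        continue
                    member = archive.read(info)
                    path = f"{name}!{info.filename}"
                    yield from deconstruct(member, path, depth + 1)
        except (zipfile.BadZipFile, RuntimeError):
            return  # corrupt or password-protected archive
```

Each yielded record is a point where indicators and metadata could be extracted without ever executing the file. A production unpacker would also need limits on total extracted size, which this sketch omits.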

Since the file is not executed, the process takes only seconds — and doesn’t suffer from the typical constraints on file type or size. In fact, RL provides the broadest file coverage in the industry, with support for 10GB file sizes along with more than 4,800 file formats and 400 different packers (the compression/archiving tools malware developers may use to obfuscate malware code). And RL's recursive binary analysis process isn’t subject to the evasion techniques that can disguise malware in sandboxes.

By starting with this advanced static analysis process, organizations can analyze and definitively classify more files immediately, drastically reducing what needs to be sent to a sandbox. Any files requiring dynamic analysis can then be run either through RL’s built-in cloud sandbox or a third-party sandbox. This approach reduces sandbox needs by roughly 90%, saving organizations both time and money.
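The static-first workflow described here amounts to a simple routing rule, sketched below. The function `triage` and the two classifier callbacks are hypothetical stand-ins, not part of any RL API: `static_classify` returns a verdict string when it can classify a file definitively and `None` when it cannot, and only those unresolved files are handed to `sandbox_classify`.

```python
from typing import Callable, Dict, Iterable, Optional, Tuple


def triage(
    files: Iterable[Tuple[str, bytes]],
    static_classify: Callable[[bytes], Optional[str]],
    sandbox_classify: Callable[[bytes], str],
) -> Tuple[Dict[str, str], int]:
    """Classify files statically first; detonate only the unresolved ones.

    Returns the verdict for each file name and the number of sandbox runs.
    """
    verdicts: Dict[str, str] = {}
    sandbox_runs = 0
    for name, data in files:
        verdict = static_classify(data)
        if verdict is None:
            # Static analysis was inconclusive, so pay for a sandbox run.
            verdict = sandbox_classify(data)
            sandbox_runs += 1
        verdicts[name] = verdict
    return verdicts, sandbox_runs
```

The sandbox-run count is the number to watch: the more files the static stage resolves, the smaller the queue that the slower dynamic stage has to work through.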

Applying the right analysis technique at the right time is key to optimizing malware detection, not only improving resource and cost efficiencies, but also delivering more accurate threat verdicts.

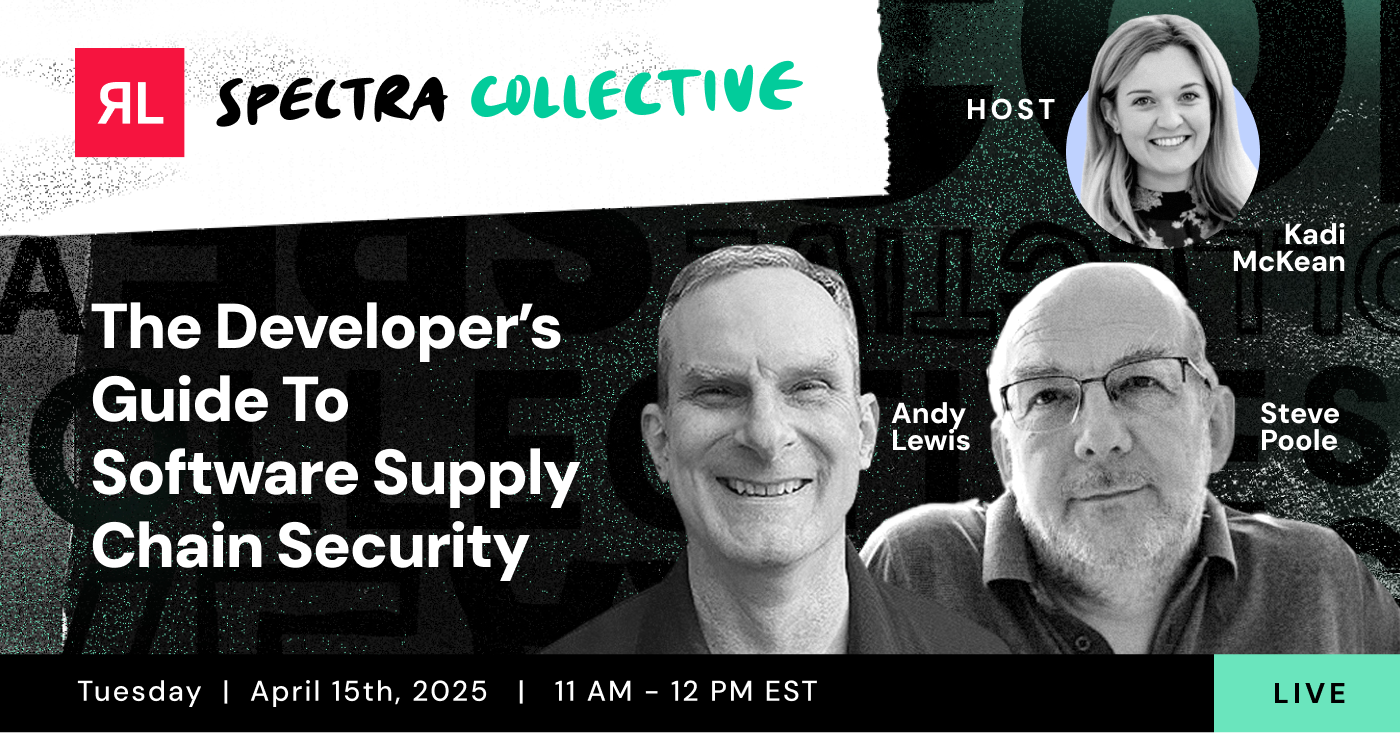

Explore RL's Spectra suite: Spectra Assure for software supply chain security, Spectra Detect for scalable file analysis, Spectra Analyze for malware analysis and threat hunting, and Spectra Intelligence for reputation data and intelligence.