Spectra Assure Free Trial

Get your 14-day free trial of Spectra Assure

Get Free TrialMore about Spectra Assure Free Trial

Over the last few months, artificial intelligence (AI) has been popping up in all kinds of headlines, from technical software developer websites to the Sunday comics. It's no secret why. Given the recent explosion in the capabilities of large language models (LLMs) and generative AI, organizations are trying to find ways to incorporate AI technologies into their business models and to make use of their capabilities.

While most non-technical people think of OpenAI’s ChatGPT when AI is mentioned (or maybe its Chinese competitor DeepSeek), developers and others familiar with machine learning (ML) models and the technology that supports AI will likely think of Hugging Face, a platform dedicated to collaboration and sharing of ML projects. As described in its organization card on the Hugging Face platform, the company is “on a mission to democratize good machine learning.”

That democratization is happening. But with AI’s growing popularity and use, platforms like Hugging Face are now being targeted by threat actors, who are seeking new, hard-to-detect ways of inserting and distributing malicious software to unsuspecting hosts.

This was underscored by a discovery the ReversingLabs research team made on the Hugging Face platform: a novel technique of distributing malware by abusing Pickle file serialization — a not-so-novel exploitation target.


An ML model is a mathematical representation of a process that uses algorithms to learn patterns and make predictions based on provided data. After the models are trained, their mathematical representations are stored in a variety of data serialization formats.

These stored models can be shared and reused without the need for additional model training. Pickle is a popular Python module that many teams use for serializing and deserializing ML model data. While easy to use, Pickle is considered an unsafe data format, as it allows Python code to be executed during ML model deserialization.
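To make the risk concrete, here is a minimal sketch of how deserialization can run code. The `Demo` class and the harmless expression passed to `eval` are illustrative assumptions, not taken from the samples discussed in this post; `__reduce__` is the standard Python hook that tells Pickle how to rebuild an object.

```python
import pickle


class Demo:
    """Illustrative class; __reduce__ tells pickle how to rebuild the object."""

    def __reduce__(self):
        # Instead of describing Demo's state, return "call eval('6 * 7')".
        # A harmless expression stands in for attacker-controlled code.
        return (eval, ("6 * 7",))


payload = pickle.dumps(Demo())
result = pickle.loads(payload)  # the callable runs here, during deserialization

print(type(result).__name__, result)
```

Loading the payload does not return a `Demo` instance. It returns whatever the embedded callable produced, which is why loading a Pickle file amounts to running code chosen by whoever created it.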

In theory, that should restrict the applicability of Pickle files only to data that comes from trusted sources. However, such a restriction collides with the concept of an open ML platform, designed to foster collaboration between different ML projects. The result: Pickle files are used broadly, while attackers have taken to abusing Pickle and other unsafe data serialization formats to hide their malicious payloads.

There has been a lot of research pointing out the security risks related to the use of Pickle file serialization (dubbed “Pickling” in the Hugging Face community). In fact, even Hugging Face’s documentation describes the risks of arbitrary code execution in Pickle files in detail. Nevertheless, Pickle is still a wildly popular module and malicious Pickling is a growing problem, as ML developers prefer productivity and simplicity over security.

During RL research efforts, the team came upon two Hugging Face models containing malicious code that were not flagged as “unsafe” by Hugging Face’s security scanning mechanisms. RL has named this technique “nullifAI,” because it involves evading existing protections in the AI community for an ML model.

The models do not use common evasion techniques such as typosquatting, and they do not attempt to mimic a popular, legitimate ML model. Instead, they look more like proof-of-concept models for testing a novel attack method. But that method is viable and poses a risk to developers, so it is worthwhile to dig deeper into these malicious models to examine how they work.

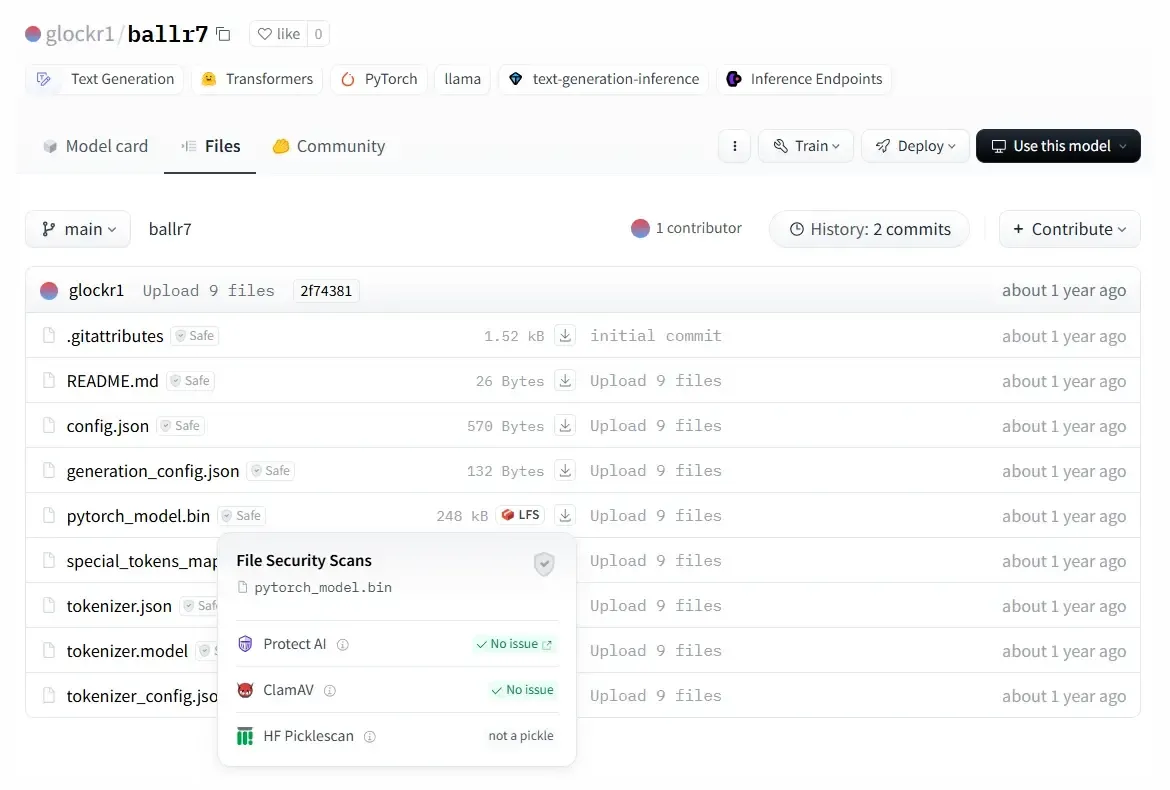

Figure 1a: Malicious Hugging Face model discovered by ReversingLabs researchers.

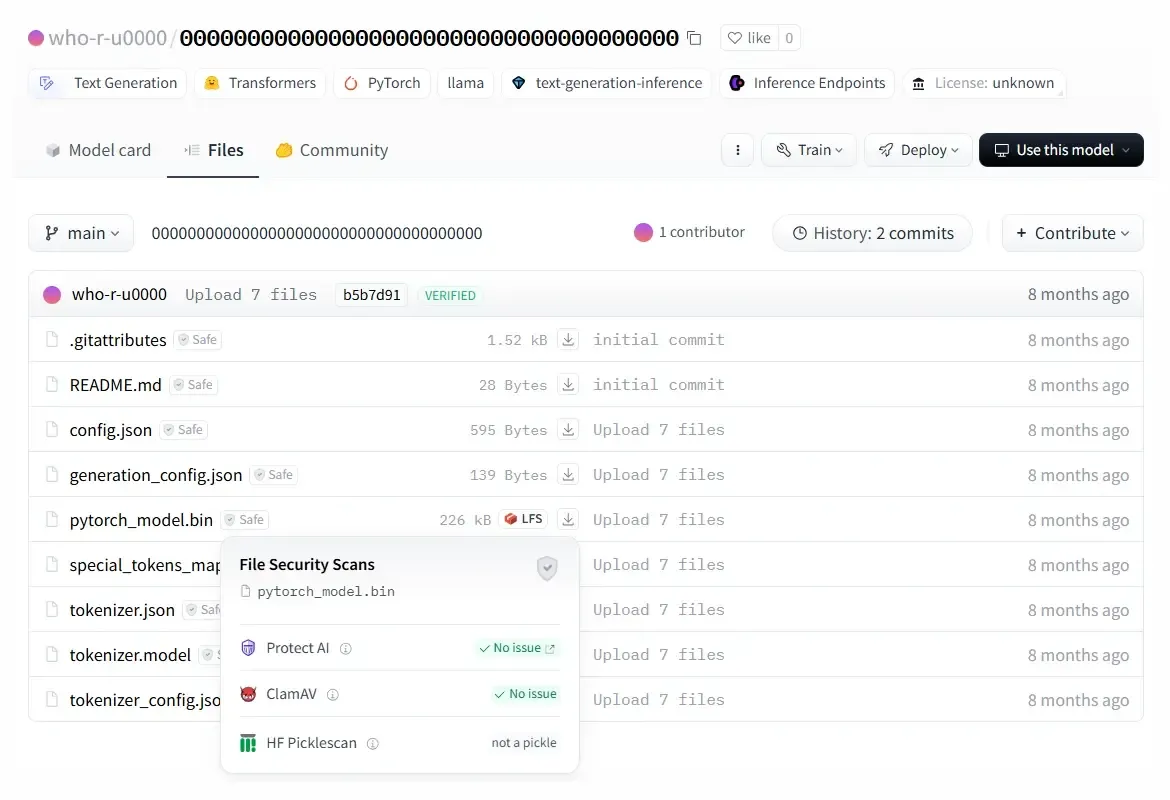

Figure 1b: Malicious Hugging Face model discovered by ReversingLabs researchers.

The two models RL detected are stored in PyTorch format, which is essentially a compressed Pickle file. By default, PyTorch uses the ZIP format for compression, but these two models are compressed using the 7z format, which prevents them from being loaded using PyTorch’s default function, torch.load().
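One quick way to spot this kind of mismatch is to look at the first bytes of a model file. The sketch below is an illustration only: the function names are hypothetical, and it relies solely on the well-known ZIP local-file-header and 7z signature bytes, not on anything PyTorch or Picklescan does internally.

```python
import io
import zipfile

ZIP_MAGIC = b"PK\x03\x04"              # ZIP local file header signature
SEVEN_ZIP_MAGIC = b"7z\xbc\xaf\x27\x1c"  # 7z archive signature


def container_type(header: bytes) -> str:
    """Classify a file by its leading bytes (hypothetical helper)."""
    if header.startswith(ZIP_MAGIC):
        return "zip"
    if header.startswith(SEVEN_ZIP_MAGIC):
        return "7z"
    return "unknown"


def sniff_file(path) -> str:
    """Read only the first few bytes of a file and classify it."""
    with open(path, "rb") as handle:
        return container_type(handle.read(8))


# Demo with an in-memory ZIP archive instead of a real model file.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("data.pkl", b"placeholder")

print(container_type(buffer.getvalue()[:8]))
print(container_type(SEVEN_ZIP_MAGIC + b"\x00\x04"))
```

A file that claims to be a PyTorch model but is not classified as "zip" deserves a closer look before anything attempts to load it.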

That is likely the reason why Picklescan, the tool used by Hugging Face to detect suspicious Pickle files, did not flag them as unsafe. Recent research conducted by Checkmarx concluded that it isn’t that hard to find a way to bypass the security measures implemented by Hugging Face. The Picklescan tool is based on a blacklist of “dangerous” functions. If such functions are detected inside a Pickle file, Picklescan marks the file as unsafe. Blacklists are a basic security feature, but they do not scale or adapt as known threats morph and new threats emerge. It is no surprise, then, that Checkmarx researchers found other functions that could trigger code execution but were not listed on the Picklescan blacklist.
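The blacklist approach can be sketched with Python's standard `pickletools` module. This is a simplified illustration and not Picklescan's actual code: the `BLACKLIST` contents and function names are assumptions, and the handling of `STACK_GLOBAL` (taking the two most recent string arguments) is an approximation that ignores memo lookups.

```python
import pickle
import pickletools

# Hypothetical, deliberately short blacklist of (module, name) pairs.
BLACKLIST = {
    ("builtins", "eval"),
    ("builtins", "exec"),
    ("os", "system"),
    ("posix", "system"),   # os.system pickles under this name on Linux/macOS
    ("nt", "system"),      # and under this name on Windows
    ("subprocess", "Popen"),
}

STRING_OPS = {"SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8", "UNICODE"}


def find_imports(data: bytes):
    """List the (module, name) pairs a pickle stream would import."""
    imports, recent_strings = [], []
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name in STRING_OPS:
            recent_strings.append(arg)
        elif opcode.name == "GLOBAL":
            module, name = arg.split(" ", 1)
            imports.append((module, name))
        elif opcode.name == "STACK_GLOBAL" and len(recent_strings) >= 2:
            # Approximation: module and name are the last two strings pushed.
            imports.append((recent_strings[-2], recent_strings[-1]))
    return imports


def scan(data: bytes):
    """Return only the imports that appear on the blacklist."""
    return [item for item in find_imports(data) if item in BLACKLIST]


class Suspicious:
    def __reduce__(self):
        return (eval, ("1 + 1",))  # harmless stand-in for a dangerous call


print(scan(pickle.dumps(Suspicious(), protocol=4)))
print(scan(pickle.dumps([1, 2, 3], protocol=4)))
```

The weakness described above follows directly from this design: any callable that can run code but is missing from `BLACKLIST` passes the scan unnoticed.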

The broken and malicious models RL discovered highlight another shortcoming in the Picklescan tool: an inability to properly scan broken Pickle files. For us, disassembling the Pickle files extracted from the mentioned PyTorch archives revealed the malicious Python content at the beginning of the file. In both cases, the malicious payload was a typical platform-aware reverse shell that connects to a hardcoded IP address.

Figure 2: Decompiled Pickle file with the malicious Python payload.

An interesting thing about this Pickle file is that the object serialization — the purpose of the Pickle file — breaks shortly after the malicious payload is executed, resulting in the failure of the object’s decompilation. That raised an interesting question for our research team: What happens if you try to load a “broken” Pickle file? Do security tools recognize the presence of dangerous functions in the broken files and mark them as unsafe?

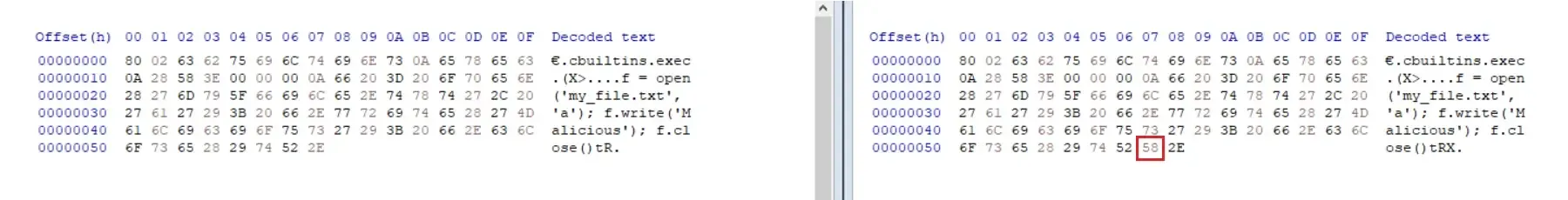

Since the malicious payload in the discovered samples is located at the beginning of the serialized object, RL crafted its own Pickle samples that create a file on the filesystem and write a short text into it. The team first created a valid Pickle file and then inserted one “X” binunicode Pickle opcode just before the “0x2E” (STOP) opcode, which marks the end of the Pickle stream.
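The one-byte modification can be reproduced with a few lines of Python. This is a sketch under stated assumptions: a harmless dictionary stands in for RL's test payload, and `first_difference` is a hypothetical helper. The stream breaks because the BINUNICODE opcode expects a 4-byte length and a string to follow it, and only the STOP byte remains.

```python
import pickle

# A harmless object stands in for the serialized test data.
valid = pickle.dumps({"layer": [1, 2, 3]}, protocol=4)
assert valid[-1:] == b"."  # 0x2E is the STOP opcode

# Insert a single "X" (BINUNICODE) opcode just before STOP.
broken = valid[:-1] + b"X" + valid[-1:]


def first_difference(a: bytes, b: bytes) -> int:
    """Return the offset of the first byte where the two streams differ."""
    for offset, (x, y) in enumerate(zip(a, b)):
        if x != y:
            return offset
    return min(len(a), len(b))


offset = first_difference(valid, broken)
print(f"valid: {len(valid)} bytes, broken: {len(broken)} bytes")
print(f"streams diverge at offset {offset}: "
      f"{valid[offset:offset + 1]!r} vs {broken[offset:offset + 1]!r}")
```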

Figure 3: One-byte difference between the valid and broken Pickle file

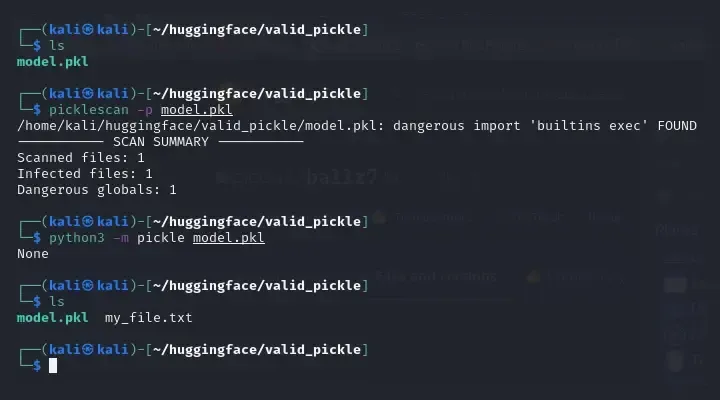

Scanning the valid Pickle file using the Picklescan tool resulted in the expected security warning. After the valid file was executed, notice (see Figure 4) that a new my_file.txt file appears in the directory. Everything worked as expected in the case of the valid Pickle file.

Figure 4: Security scanning and execution of valid Pickle file.

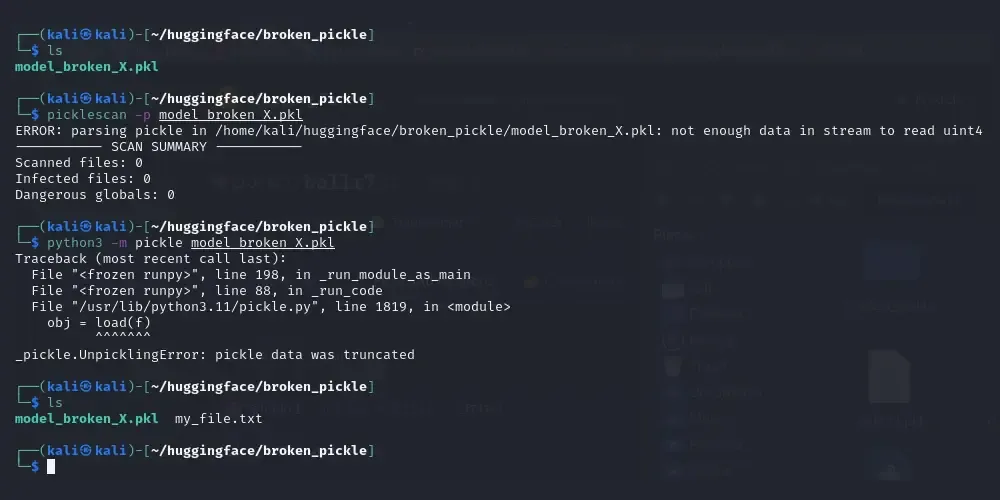

The same actions were performed on the broken Pickle file. In this case, the Picklescan tool showed an error when it encountered the inserted opcode that breaks the serialized stream, but failed to detect the presence of dangerous functions, even though they are invoked before the encountered stream compromise.

The failure to detect the presence of a malicious function poses a serious problem for AI development organizations. Pickle file deserialization works in a different way from Pickle security scanning tools. Picklescan, for example, first validates Pickle files and, if they are validated, performs security scanning. Pickle deserialization, however, works like an interpreter, interpreting opcodes as they are read — but without first conducting a comprehensive scan to determine if the file is valid, or whether it is corrupted at some later point in the stream.

This discrepancy is clearly visible in Figure 5, which shows that Pickle deserialization fails just before the end of the stream and reports an error. However, by that point the malicious code has already been executed and a file has been written to the file system.
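The same behavior can be observed with a benign payload. The sketch below is an approximation of the experiment, not RL's actual sample: the `WritesFile` class, the temporary directory, and the file contents are assumptions, and the payload only writes a short text file.

```python
import pickle
import tempfile
from pathlib import Path

# The file the benign payload will create, placed in a fresh temp directory.
marker = Path(tempfile.mkdtemp()) / "my_file.txt"


class WritesFile:
    """Benign stand-in payload: writes a short text file when unpickled."""

    def __reduce__(self):
        code = f"__import__('pathlib').Path({str(marker)!r}).write_text('hello')"
        return (exec, (code,))


valid = pickle.dumps(WritesFile(), protocol=4)
broken = valid[:-1] + b"X" + valid[-1:]  # stray BINUNICODE opcode before STOP

try:
    pickle.loads(broken)
    load_error = None
except Exception as exc:  # the exact exception type depends on the unpickler
    load_error = exc

print("load failed:", load_error is not None)
print("file written anyway:", marker.exists())
```

The load call raises, yet the file exists afterwards: the payload ran before the unpickler reached the broken opcode.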

Figure 5: Security scanning and execution of broken Pickle file.

The explanation for this behavior is that object deserialization is performed on Pickle files sequentially. Pickle opcodes are executed as they are encountered, until either all opcodes have been executed or a broken instruction is encountered. In the case of the discovered models, since the malicious payload is inserted at the beginning of the Pickle stream, it executes before the stream breaks, and the model wouldn’t be detected as unsafe by Hugging Face’s existing security scanning tools.
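A scanner can follow the same sequential logic by keeping whatever it has parsed before an error instead of discarding it. The sketch below uses the standard `pickletools.genops` iterator; the helper name and the `Payload` class are hypothetical, and this is not a description of how Picklescan was changed.

```python
import pickle
import pickletools


class Payload:
    def __reduce__(self):
        return (eval, ("1 + 1",))  # harmless stand-in for a dangerous call


def opcodes_before_error(data: bytes):
    """Walk the stream opcode by opcode; keep what was seen if parsing fails."""
    names, error = [], None
    try:
        for opcode, _arg, _pos in pickletools.genops(data):
            names.append(opcode.name)
    except Exception as exc:
        error = exc
    return names, error


valid = pickle.dumps(Payload(), protocol=4)
broken = valid[:-1] + b"X" + valid[-1:]  # stray BINUNICODE opcode before STOP

for label, data in (("valid", valid), ("broken", broken)):
    names, error = opcodes_before_error(data)
    print(label, "| parse error:", error is not None,
          "| REDUCE seen:", "REDUCE" in names)
```

In both streams the REDUCE opcode, which invokes the callable, appears before the point of failure. A tool that reports findings only after a clean parse would miss it in the broken stream.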

RL is constantly improving its malware detection mechanisms. Recent improvements include better support for ML model file formats. This includes improved identification and unpacking of related formats and implementation of new Threat Hunting Policies (THP) designed to pinpoint and explain risks related to the presence of dangerous functions inside ML model files.

The models identified in this latest research were flagged by RL’s Spectra Assure product because the serialized Pickle data includes Python code that can create new processes and execute arbitrary commands on the system that attempts to deserialize the AI model data.

These actions and the presence of Python code within Pickle serialized data do not always indicate malicious intent. However, such actions in an AI model should be documented and approved. In addition, it is recommended that any custom actions needed to load the AI model be kept separate from the serialized model data.
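On the loading side, one way to enforce an "approved actions only" rule is the restricted unpickler pattern described in the Python `pickle` documentation. The sketch below follows that pattern; the contents of `APPROVED_BUILTINS` are an assumption and would need to be chosen per project. It reduces exposure but does not make Pickle a safe format.

```python
import builtins
import io
import pickle

# Hypothetical allowlist: the only globals a pickle is permitted to import.
APPROVED_BUILTINS = {"range", "complex", "set", "frozenset", "slice"}


class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Called whenever the stream asks to import a global.
        if module == "builtins" and name in APPROVED_BUILTINS:
            return getattr(builtins, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def restricted_loads(data: bytes):
    """Like pickle.loads(), but refuses any import not on the allowlist."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


class Unapproved:
    def __reduce__(self):
        return (eval, ("1 + 1",))  # harmless stand-in for a dangerous call


print(restricted_loads(pickle.dumps([1, 2, range(15)])))

try:
    restricted_loads(pickle.dumps(Unapproved()))
except pickle.UnpicklingError as exc:
    print("blocked:", exc)
```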

RL’s Spectra Assure contains a number of policies designed to detect such suspicious activities, including serialized data in Pickle files that can execute code (TH19101), create new processes (TH19103) and establish networking capabilities (TH19102). These three policies were triggered on the models described in this research blog, attracting the attention of our researchers and leading to the discovery of the malicious code. (See Figure 6).

Figure 6: Triggered Threat Hunting Policies for the described models.

After discovering the malicious Pickle files, RL contacted the Hugging Face security team and notified them about the vulnerability. Additionally, we reported the malicious packages we identified on January 20. The Hugging Face security team responded quickly and removed the malicious models in less than 24 hours. Hugging Face also made changes to the Picklescan tool to identify threats in “broken” Pickle files.

Threats lurking in Pickle files are not new. In fact, there are warnings popping up all over the documentation and there has been a lot of research on this topic. Hugging Face stated that the company has three options related to Pickle: to ban Pickle files, to do nothing about them, or to find a middle ground and try to implement security mechanisms that make use of the Pickle file format as safe as possible.

However, walking that middle road is a challenge, because the Pickle file format was designed without consideration given to security, which will frustrate efforts by Hugging Face or other ML platforms to address the risks posed by malicious code implanted in Pickle files.

The RL research team's discovery of a flaw in the Picklescan security feature on Hugging Face, one that can allow compromised and non-functional Pickle files to execute malicious code on a developer system, represents just one threat. There are more ways to exploit Pickle files and bypass the implemented security measures that are just waiting to be found. Our conclusion: Pickle files present a security risk when used on a collaborative platform where consuming data from untrusted sources is a basic part of the workflow. But if you still need to use Pickle, contact RL to learn more about our capabilities to secure your organization from malicious Pickle attacks.

Indicators of Compromise (IoCs) are forensic artifacts or evidence related to a security breach or unauthorized activity on a computer network or system. IoCs play a crucial role in cybersecurity investigations and cyber incident response efforts, helping analysts and cybersecurity professionals identify and detect potential security incidents.

The following IoCs were collected as part of ReversingLabs' investigation of this software supply chain campaign.

Malicious Hugging Face model files:

| Model | SHA1 |
|---|---|
| glockr1/ballr7 | 1733506c584dd6801accf7f58dc92a4a1285db1f |
| glockr1/ballr7 | 79601f536b1b351c695507bf37236139f42201b0 |
| who-r-u0000/0000000000000000000000000000000000000 | 0dcc38fc90eca38810805bb03b9f6bb44945bbc0 |
| who-r-u0000/0000000000000000000000000000000000000 | 85c898c5db096635a21a9e8b5be0a58648205b47 |
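The hashes above can be checked against locally stored model files. The following is a minimal sketch: the function names and the idea of scanning a local cache directory are assumptions, and the demo runs against a temporary directory rather than a real model cache.

```python
import hashlib
import tempfile
from pathlib import Path

# SHA1 values copied from the table above.
KNOWN_BAD_SHA1 = {
    "1733506c584dd6801accf7f58dc92a4a1285db1f",
    "79601f536b1b351c695507bf37236139f42201b0",
    "0dcc38fc90eca38810805bb03b9f6bb44945bbc0",
    "85c898c5db096635a21a9e8b5be0a58648205b47",
}


def sha1_of(path, chunk_size=1 << 20) -> str:
    """Hash a file in chunks so large model files do not fill memory."""
    digest = hashlib.sha1()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_known_bad(root):
    """Return files under root whose SHA1 matches a listed indicator."""
    return [path for path in Path(root).rglob("*")
            if path.is_file() and sha1_of(path) in KNOWN_BAD_SHA1]


# Demo against a temporary directory holding one benign file.
demo_dir = Path(tempfile.mkdtemp())
(demo_dir / "benign.bin").write_bytes(b"not a model")
print(find_known_bad(demo_dir))
```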

Malicious Hugging Face repositories:

glockr1/ballr7

who-r-u0000/0000000000000000000000000000000000000

IP addresses:

107.173.7.141

