Spectra Assure Free Trial

Get your 14-day free trial of Spectra Assure

Get Free TrialMore about Spectra Assure Free Trial

The Open Worldwide Application Security Project (OWASP) is preparing a Top 10 list of large language model projects on an accelerated timetable in response to the rapid development and deployment of LLMs such as OpenAI's ChatGPT and AI-based software development tools such as Microsoft's GitHub Copilot.

OWASP is now working on an eight-week plan to finalize the first version of the list, and the project has garnered a lot of interest. Steve Wilson, project leader and chief product officer at Contrast Security, said he was surprised by the degree of interest from the security and developer community.

Steve WilsonI thought there'd be 10 or 15 people in the world interested in this, but the last time I checked, I had 260 people signed up on the expert group. We've decided as a group that we're going to be very aggressive about getting out a first version of the document so people have some guidance. That's why we developed the eight-week road map.

Wilson said the driver for the OWASP Top 10 for LLMs was the fact that there is so little guidance for software teams in the industry, "so the most important thing is to get something out that's going to outline the most important threats and the best way to mitigate them," he said.

Steve WilsonWe're trying to make this a foundational document, not just lifting out what the most important vulnerabilities are, but also providing some concrete guidance for developers about how to code securely if they're going to embed a large language model, like ChatGPT, into their applications.

Here's what your software team needs to know about the new OWASP Top 10 list for LLM.

Get White Paper: How the Rise of AI Will Impact Software Supply Chain Security

Chris Romeo, managing general partner at the cybersecurity startup investment and advisory firm Kerr Ventures, called the explosion of ChatGPT and other LLMs, including both commercial and open-source options, "a perfect storm for security."

Chris RomeoSecurity often lags cutting-edge innovation. In this case, we need an OWASP Top 10 list for LLMs to get ahead of the security issues that LLMs generate within technology products.

Chris Hughes, CISO and co-founder of Aquia, said the OWASP Top 10 approach has been a longstanding industry mainstay, giving security practitioners guidance on the most prevalent threats and risks associated with specific domains.

Chris HughesAI and LLMs are no different. As organizations quickly continue to adopt LLM's, they need a standardized set of security best practices and guidance to rally around to securely use LLMs, and the OWASP Top 10 serves as a critical resource in that space.

ReversingLabs Field CISO Matt Rose said a list of LLM vulnerabilities is needed — fast — because developers are using these models now in their workflow without realizing what they're doing.

Matt RosePeople are using AI and ChatGPT to make their lives easier without understanding the full threat landscape associated with them. They don't realize that the information they put into ChatGPT becomes part of its training data that will be used in future responses to prompts about their organizations. People are trying to work faster, but not smarter.

The first draft of the Top 10 list of LLM vulnerabilities looks like this:

1. Prompt injections, which can manipulate an LLM into ignoring previous instructions and misbehaving

2. Data leakage, where an LLM reveals sensitive information in its responses

3. Inadequate sandboxing, which can lead to an LLM opening up a system to exploitation and unauthorized access

4. Unauthorized code execution, where an LLM is exploited to execute malicious code, commands, or actions

5. SSRF vulnerabilities, which can make an LLM perform unintended requests or access restricted resources

6. Overreliance on LLM-generated content, which can have harmful consequences if a human isn't put in the loop

7. Inadequate AI alignment, which occurs when an LLM's objectives and behavior aren't lined up with its intended use

8. Insufficient access controls, which can result in unauthorized users interacting with a system

9. Improper error handling, which can lead to sensitive information being exposed

10. Training data poisoning, where training data is manipulated to introduce vulnerabilities or backdoors into a system

Some of the vulnerabilities in the draft list, including data leakage, SSRF vulnerabilities, and insufficient access controls, are similar to those found in the Top 10 lists for web apps and APIs. "If you look at the classic OWASP Top 10, injection attacks are at the top of the list and have been for many years," OWASP's Wilson said.

For a web application, he explained, a big worry is untrusted data that can come into the system that get into your SQL database or log files.

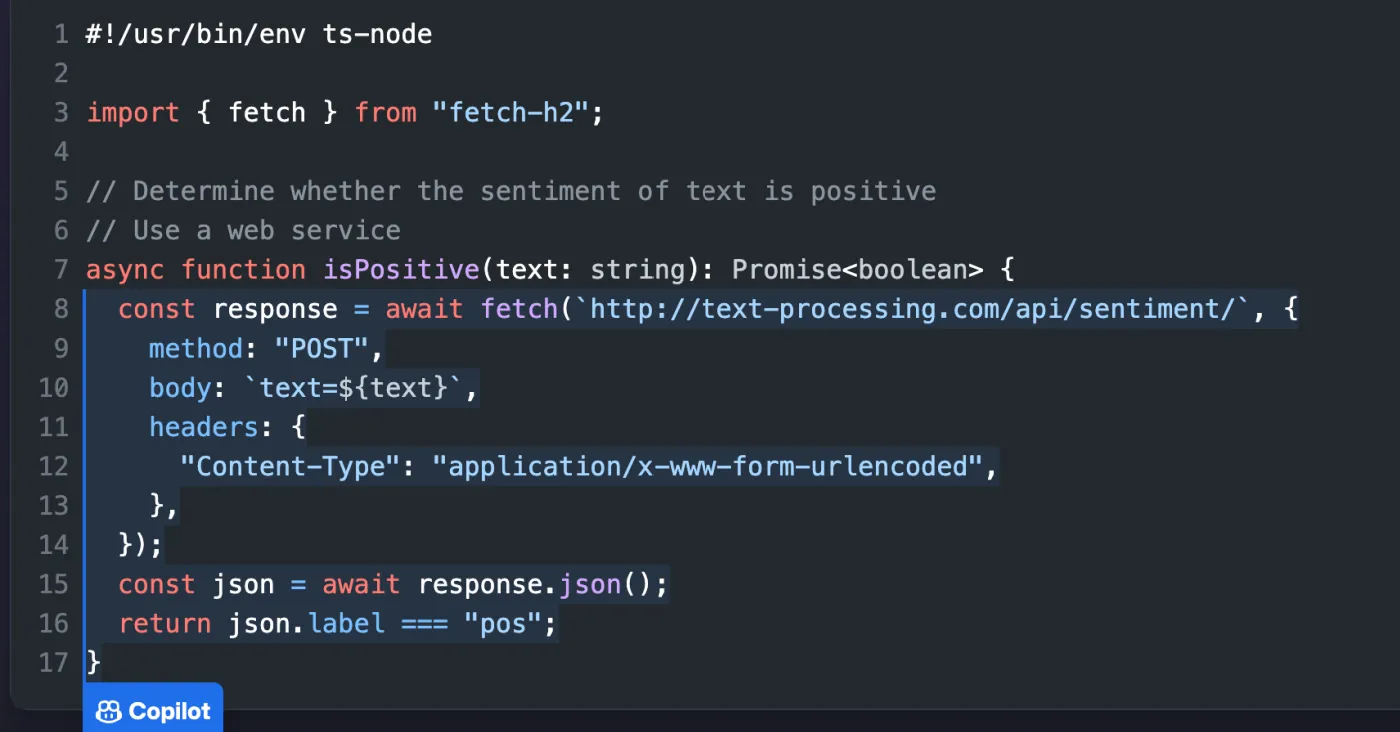

Steve WilsonIn the large language model world, the hot topic now is prompt injection. OpenAI has built in guardrails to keep ChatGPT from helping people conduct illegal activity. But people create clever versions of prompts that can skirt those guardrails to get the language model world to misbehave or give up data it shouldn't be giving up.

The AppSec community understands the general idea of an injection attack, but there's an unusual twist on it with LLMs, and that's what OWASP is trying to educate people on, Wilson said.

LLMs can also be vulnerable to buffer overflow attacks and race condition vulnerabilities, which occur when multiple threads of execution are accessing the same data at the same time.

There are also vulnerabilities that are strictly in the LLM domain, however. "LLM vulnerabilities create a new world, where there are more decision paths that exist on the server side of the application," Romeo said.

Chris RomeoTraditional web applications follow a series of instructions, and if you interact with the service the same way two times in a row, you’ll get the same result. With LLM, you are introducing more decision trees and possibilities on the processing of results eventually sent to a user.

This execution/decision process introduces new kinds of vulnerabilities that have never existed in software, Romeo said.

Another thing that won't be found on any other OWASP list: overreliance on content produced by LLMs. Scott Gerlach, co-founder and CSO of StackHawk, said overreliance on LLM content has already been shown to have consequences when used, especially in development.

Scott GerlachRecent research has shown that LLM-generated code often introduces more security vulnerabilities.

Hughes said the threat posed by overreliance cannot be overstated, and it is one of the most pertinent risks on the draft list of the OWASP Top 10 for LLMs. "While it isn't necessarily a direct technical vulnerability like some of the others on the list, it highlights the socio-technical aspects of software and LLMs," he said.

If humans, including developers, become overly reliant on LLMs and let this incredibly powerful tool do activities that they previously conducted on their own, they may see their technical skills, development proficiency, and broader expertise atrophy, Hughes said.

Chris HughesIt is like a muscle, where if you are no longer reliant on it, don't utilize it, and instead leverage an alternative, there can be the risk of your expertise and proficiency declining.

Creating a Top 10 list for LLMs will not be without its challenges. "LLM technology is constantly evolving, making it difficult to keep up with the latest vulnerabilities and threats," said Michael Erlihson, principal data scientist at Salt Security.

Erlihson added that LLMs are complex, making it challenging to identify vulnerabilities and threats. LLM development is also not standardized, he continued, making it difficult to create a comprehensive list of vulnerabilities.

Michael ErlihsonIn addition to these challenges, it is important to note that LLMs are constantly being improved and updated, which means that any list of vulnerabilities is likely to become outdated quickly.

There's also a knowledge gap that needs to be bridged. "Security people have not had any substantial training on AI, and we’re all trying to figure this out at the same time," Kerr Ventures' Romeo said.

Chris RomeoThe great thing about the OWASP project is that they have collected top experts from the field and have a burgeoning community wrapped around the creation of this initial list. The best minds in the industry are working together to ensure that the proper items are put forth.

But focusing the best and brightest on a problem can create its own set of challenges, OWASP's Wilson said.

Steve WilsonOne of our biggest challenges is going to be generating consensus. When you have 200-plus experts from a wide range of disciplines, coming down to an authoritative Top 10 list is going to be a big challenge.

Wilson noted that going forward, the group will probably shift to a slower cadence that will be more methodical and more like the other OWASP Top 10 lists, with updates every six to 12 months. He also expects to be incorporating into the list more data from real-world breaches and vulnerabilities, as other OWASP lists do now.

Explore RL's Spectra suite: Spectra Assure for software supply chain security, Spectra Detect for scalable file analysis, Spectra Analyze for malware analysis and threat hunting, and Spectra Intelligence for reputation data and intelligence.

Get your 14-day free trial of Spectra Assure

Get Free TrialMore about Spectra Assure Free Trial