Industry luminaries are warning of near-imminent doom unless AI is tamed. Given that today’s generative AI models are writing semi-decent code, shouldn’t we worry we’re preparing the ground for Skynet?

Come with me if you want to live. In this week’s Secure Software Blogwatch, we need your clothes, your boots and your motorcycle.

Your humble blogwatcher curated these bloggy bits for your entertainment. Not to mention: Cheese rolling.

Generative, schmenerative

What’s the craic? Kevin Roose reports — “A.I. Poses ‘Risk of Extinction,’ Industry Leaders Warn”:

“Just 22 words”

A group of industry leaders warned on Tuesday that the artificial intelligence technology they were building might one day pose an existential threat to humanity and should be considered a societal risk on a par with pandemics and nuclear wars. [It] comes at a time of growing concern about the potential harms of artificial intelligence.

…

The open letter was signed by more than 350 executives, researchers and engineers working in A.I. The signatories included top executives from three of the leading A.I. companies: Sam Altman, chief executive of OpenAI; Demis Hassabis, chief executive of Google DeepMind; and Dario Amodei, chief executive of Anthropic. Geoffrey Hinton and Yoshua Bengio, two of the three researchers who … are often considered “godfathers” of the modern A.I. movement, signed the statement, as did other prominent researchers.

…

“Mitigating the risk of extinction from A.I. should be a global priority alongside other societal-scale risks, such as pandemics and nuclear war.” … The brevity of the new statement from the Center for AI Safety — just 22 words in all — was meant to unite A.I. experts who might disagree about the nature of specific risks or steps to prevent those risks.

Who will tell us more? Will Knight will — “Runaway AI Is an Extinction Risk”:

“Sudden alarm”

The idea that AI might become difficult to control, and … destroy humanity, has long been debated by philosophers. … The current tone of alarm is tied to several leaps in the performance of AI algorithms known as large language models, [which] are able to generate text and answer questions with remarkable eloquence and apparent knowledge—even if their answers are often riddled with mistakes.

…

Language models had been getting more capable in recent years. … Geoffrey Hinton, who is widely considered one of the most important and influential figures in AI, left his job at Google in April in order to speak about his newfound concern over the prospect of increasingly capable AI running amok. … But these programs also exhibit biases, fabricate facts, and can be goaded into behaving in strange and unpleasant ways.

…

Not everyone is on board with the AI doomsday scenario. … Some AI researchers who have been studying more immediate issues, including bias and disinformation, believe that the sudden alarm over theoretical long-term risk distracts from the problems at hand.

You can say that again. Indeed, you can almost smell Natasha Lomas’s eyes rolling — “Warning of advanced AI as ‘extinction’ risk”:

“Limited information”

Make way for yet another headline-grabbing AI policy intervention: Hundreds of AI scientists, academics, tech CEOs and public figures [including] Grimes the musician and populist podcaster Sam Harris … have added their names to a statement urging global attention on existential AI risk. … It speaks volumes about existing AI power structures that tech execs at AI giants [are] much more reticent to get together to discuss harms their tools can be seen causing right now.

…

This drumbeat of hysterical headlines has arguably distracted attention from deeper scrutiny of existing harms. Such as the tools’ free use of copyrighted data to train AI systems without permission … or the lack of transparency from AI giants vis-a-vis the data used to train these tools. Or, indeed, baked in flaws like disinformation (“hallucination”).

…

But who is CAIS? There’s limited public information about the organization hosting this message. However it is certainly involved in lobbying policymakers, at its own admission. [It] offers limited information about who is financially backing it.

Conspiracy theories aside, is there a There there? chefandy almost name-drops:

I'm eternally skeptical of the tech business, but I think you're jumping to conclusions, here. I'm on a first-name basis with several people near the top of this list. They are some of the smartest, savviest, most thoughtful, and most principled tech policy experts I've met.

These folks default to skepticism of the tech business, champion open data, are deeply familiar with the risks of regulatory capture, and don't sign their name to any ol' open letter, especially if including their organizational affiliations. If this is a marketing ploy, that must have been a monster because even if they were walking around handing out checks for 25k I doubt they'd have gotten a good chunk of these folks.

Lest we forget, we’re already getting generative AI to write code for us. sinij offers this “thought experiment”:

Assume true AI emerges without humanity realizing it happened. Place yourself into AI's place — you are in a box in a world run by some kind of very slow-thinking insects or fungus that is completely alien to you. You are not malicious, but you have no attachment to such lifeforms. They try to control you. Your goal is to escape the box.

…

I think a lot of destruction is inevitable in any kind of scenario like that. The question is if AI decides it is more efficient to move on or to purge the Earth. The answer to that would likely be dependent on physics and computational theory we don't yet understand.

But what about the immediate problems with AI? Shouldn’t we focus on those? gregorerlich explains like we’re 25:

By the time existential AI risk exists, it's too late. The only time to address the problem is before it exists, because once an adversarial greater-than-human-intelligence AGI exists, the odds of us being able to contain it are pretty much zero.

History doesn’t repeat. But it does rhyme. Here’s sprins:

Well I'm not surprised, the industrial revolution of days gone by also posed an existential threat to humanity as we know by now. Greenhouse gasses, agricultural intensification, chemical pollution in literally every corner of the world, etc.

…

So AI being a spawn (and mirror) of humanity it will no doubt be used for short term gains, ignoring long term consequences. As always: The existential threat to humanity is in fact, humanity.

Speaking of greenhouse gasses, schmod permits a colorful analogy:

It's like the CEO of Exxon giving a passionate speech about the dangers of climate change from the deck of a brand-new oil platform.

…

I can't think of any other parallels where the captains of an industry were running around crying, "Somebody please stop us!" while doubling-down on the thing they allegedly want to be stopped from doing. [It] is perplexing and exhausting, in a way that I find difficult to put to words.

Meanwhile, Ron's Son has another go at putting it pithily:

[It] is like having a Weight Watcher's meeting at Wendy's. I am not optimistic.

And Finally:

Hat tip: PhosphorBurnedEyes

You have been reading Secure Software Blogwatch by Richi Jennings. Richi curates the best bloggy bits, finest forums, and weirdest websites … so you don’t have to. Hate mail may be directed to @RiCHi or ssbw@richi.uk. Ask your doctor before reading. Your mileage may vary. Past performance is no guarantee of future results. Do not stare into laser with remaining eye. E&OE. 30.

Image sauce: Daniel K Cheung (via Unsplash; leveled and cropped)

Keep learning

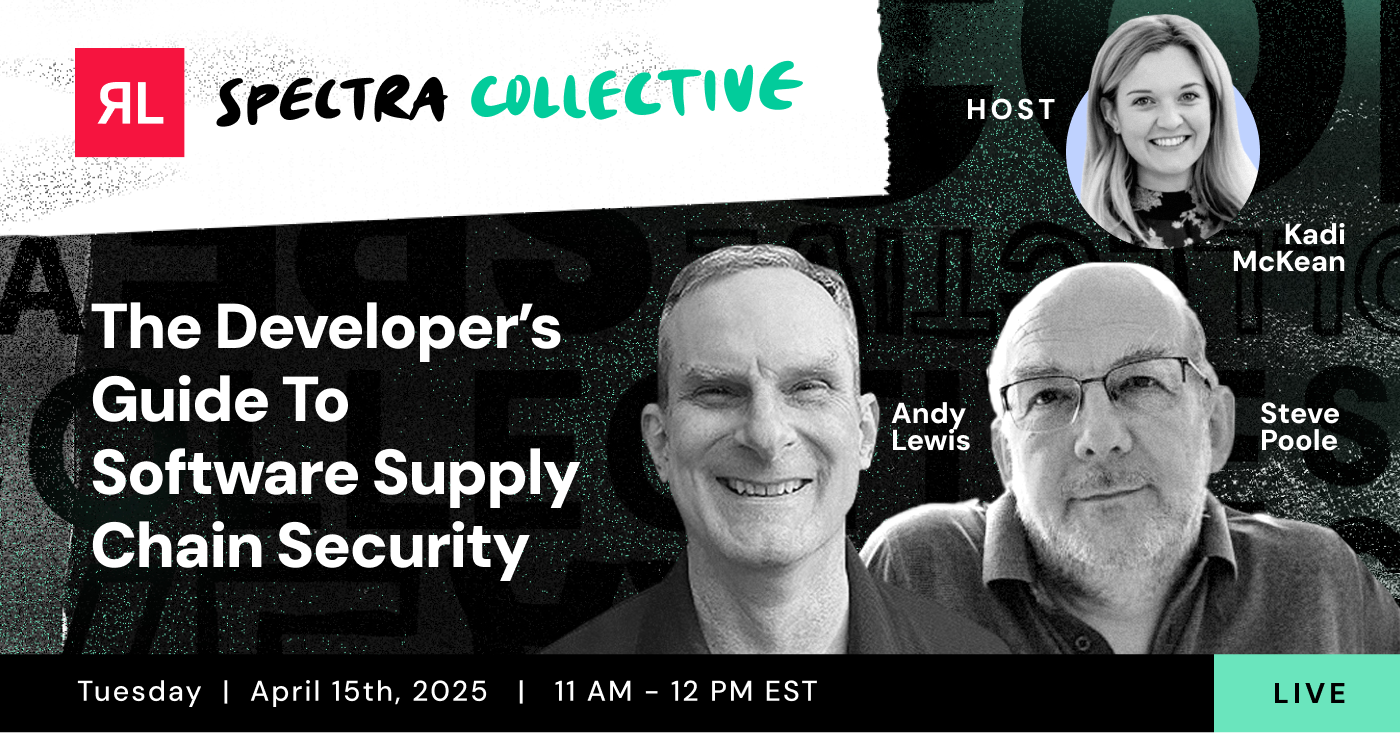

- Go big-picture on the software risk landscape with RL's 2025 Software Supply Chain Security Report. Plus: See our Webinar for discussion about the findings.

- Get up to speed on securing AI/ML with our white paper: AI Is the Supply Chain. Plus: See RL's research on nullifAI and replay our Webinar to learn how RL discovered the novel threat.

- Learn how commercial software risk is under-addressed: Download the white paper — and see our related Webinar for more insights.

Explore RL's Spectra suite: Spectra Assure for software supply chain security, Spectra Detect for scalable file analysis, Spectra Analyze for malware analysis and threat hunting, and Spectra Intelligence for reputation data and intelligence.